Entity Framework Code First Migrations: Alpha - NuGet Package of the Week #10

Hot on the heels of my RFC blog post on product versioning, the Entity Framework team has released Entity Framework 4.1 Code First Migrations: August 2011 CTP. Cool. And it's July, too!

Or my preferred product name, "Migrating Magic Unicorns 0.5." It's probably best to think of this as 0.5 Alpha Migrations for EF but that's my guess at a name and not nearly as descriptive.

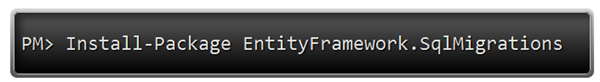

I showed early daily builds of EF Migrations at a few conferences recently, and encouraged folks to comment on the ADO.NET team blog. Now they've released bits for us to play with. This initial CTP is available via NuGet as the EntityFramework.SqlMigrations package.

Here's the general idea. Be aware that this is NOT specific to the Web. You can do this in a Console App or whatever. I just like Hello World Web applications.

Short Walkthrough

Make a new ASP.NET MVC app. Go to Manage NuGet Packages from References and search for EntityFramework.SqlMigrations. Note the dependency it has. It'll upgrade your project's EntityFramework package as well.

Now, make a new class with a simple model:

namespace MvcApplication15.Models

{

public class Person

{

public int PersonId { get; set; }

public string Name { get; set; }

}

public class DemoContext : DbContext

{

public DbSet<Person> People { get; set; }

}

}

Scaffold out a quick Person Controller...

Visit it at /Person and make a few People.

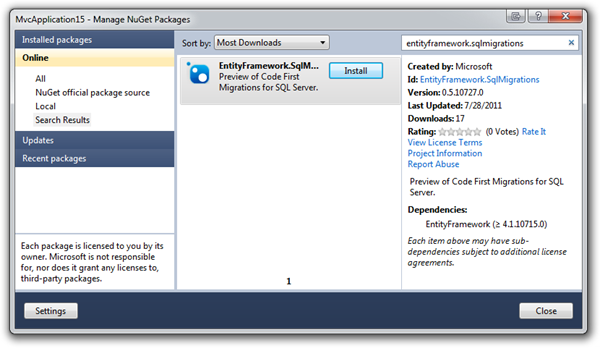

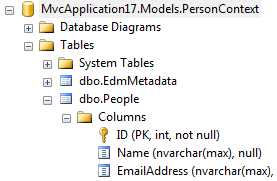

The database that was created looks like this. There's a People table and the First column that is an nvarchar.

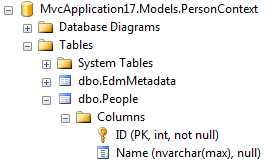

Now, let's add an Email field. I'll update the Person class to include Email:

public class Person

{

public int ID { get; set; }

public string Name { get; set; }

public string Email { get; set; }

}

Then, I'll compile and from the console inside Visual Studio, I'll do this:

PM> update-database

No pending custom scripts found.

Ensuring database matches current model.

- Performing automatic upgrade of database.

- Starting rebuilding table [dbo].[EdmMetadata]...

- Caution: Changing any part of an object name could break scripts and stored procedures.

- Starting rebuilding table [dbo].[People]...

- Caution: Changing any part of an object name could break scripts and stored procedures.

- Update complete.

I typed update-database, that's all. This is an automatic migration. See how the system compare the .NET type and the database and did what needed to be done:

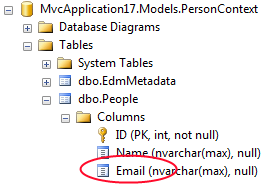

Now, let's rename Email to EmailAddress. If I change the Person...

public class Person

{

public int ID { get; set; }

public string Name { get; set; }

public string EmailAddress { get; set; }

}

And type update-database...

PM> update-database

No pending custom scripts found.

Ensuring database matches current model.

- Performing automatic upgrade of database.

Update-Database : - .Net SqlClient Data Provider: Msg 50000, Level 16, State 127, Line 6 Rows were detected. The schema update is terminating because data loss might occur.

At line:1 char:16

+ Update-Database <<<<

+ CategoryInfo : NotSpecified: (:) [Update-Database], Exception

+ FullyQualifiedErrorId : UnhandledException,System.Data.Entity.Migrations.Commands.MigrateCommand

I'm told that data loss may occur. It can't tell that I want to rename that column or not. It doesn't know what it was before and what it wants to be. Maybe I want to drop Email and add EmailAddress? Who knows. Let me be explicit and give Migrations more context.

PM> Update-Database -Renames:"Person.Email=>Person.EmailAddress"

No pending custom scripts found.

Ensuring database matches current model.

- Performing automatic upgrade of database.

- The following operation was generated from a refactoring log file d5598498-a656-4ccd-1e93-bea562ab6e31

- Rename [dbo].[People].[Email] to EmailAddress

- Caution: Changing any part of an object name could break scripts and stored procedures.

- Update complete.

I'm not sure if I like that Renames: syntax. I'm sure the team would be interested in your opinion. But that works, as I can see in the database.

What Changes Can Migrations Detect Automatically?

From the ADO.NET blog:

Here is the full list of changes that migrations can take care of automatically:

- Adding a property or class

- Nullable columns will be assigned a value of null for any existing rows of data

- Non-Nullable columns will be assigned the CLR default for the given data type for any existing rows of data

- Renaming a property or class

- See ‘Renaming Properties & Classes’ for the additional steps required here

- Renaming an underlying column/table without renaming the property/class

(Using data annotations or the fluent API)

- Migrations can automatically detect these renames without additional input

- Removing a property

- See ‘Automatic Migrations with Data Loss’ section for more information

Moving to Staging/Production/etc

Once dev is correct, when you want to move to production, you would generate a script for your other database by doing a diff between what that DB looks like and what the code looks like.

For example, -script generates a script I can run myself with osql.exe or whatever.

update-database -Script -ConnectionString "SERVER=.\SQLEXPRESS;Database=PersonProd;Trusted_Connection=true;"

Which spits out something like (but more complex than) this:

CREATE TABLE [dbo].[People] (

[ID] INT IDENTITY (1, 1) NOT NULL,

[Name] NVARCHAR (MAX) NULL,

[EmailAddress] NVARCHAR (MAX) NULL,

PRIMARY KEY CLUSTERED ([ID] ASC)

);

Call for Feedback

The EF Team has also this specific call for feedback when it comes to Custom Scripts:

Call for Feedback: From what we are seeing in our own internal use we don’t anticipate that custom scripts will be required very often. However, our efforts are somewhat sheltered from the ‘real world’ so we would love feedback on situations where you need to use custom scripts. In particular we are interested if there are significant scenarios where a code based alternative to writing raw SQL would be beneficial.

Leave your thoughts in my comments, or theirs and I'll make sure the right people get it.

Enjoy!

P.S. Note the EntityFramework.SqlMigrations NuGet package's exposed version number. It's 0.5.10727.0. ;)

Limitations

This is Alpha, so read the Limitations section. They are putting out rough things like this because they know we want to see bits earlier, but the trade off is limitations. Here's a few. Read the list for the rest.

- There is no provider model, this release only targets SQL Server, including SQL Azure. SQL Compact and other providers are not supported. We are currently working through what the provider model should look like for migrations.

- Question: What do you think? How important is this?

- Migrations currently needs to run in full trust. This isn’t an issue when working inside of Visual Studio but if consuming the migrations assembly from custom code you may want to run in medium trust. We are looking at ways to support this in a later release.

- This release is only available via NuGet. As we support more scenarios such as team build and an ‘outside of Visual Studio’ command line experience we will also support more installation options.

- Scott: Limitation? That's lovely! ;)

- Downgrade is currently not supported. When generating custom scripts you will notice that the script is named ‘Up.sql’ but there is no corresponding ‘Down.sql’. We are planning to add downgrade functionality prior to RTM but it is not available in this release.

I'm glad we're getting migrations. It's been a missing LEGO piece for a while.

Related Links

- Code First Migrations: August 2011 CTP Released

- Please be sure to read the ‘Issues & Limitations’ section of the announcement post before using migrations. It's a 0.5 version. That's a Fuzzy Alpha, friends.

- Code First Migrations: Walkthrough of August 2011 CTP

- Comment in the Entity Framework Pre-Release Forum. They are listening intently.

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

About Newsletter

Good to see EF Migrations go from 'build in a zip on Scott's laptop' to 'CTP' :-). Congrats to the team.

One question, what you did above was a dev scenario, what would a typical workflow be after the dev is all good to go?

In my enterprise environment, I have to handover my 'migration script (mish mash of DDL and DML)' to the release engineer. Rather he gets it's from source control apart for the latest code etc etc. Then goes ahead and does code deployment and runs the 'migration script'.

So does EF Migrations imply instead of SQL script I have powershell script? Or does the following

"...but if consuming the migrations assembly from custom code you may want to run in medium trust..."

imply we need to write bootstrapping code to run the migration script the first time?

Or is the entire 'production scenario' still open for debate?

update-database -Script -ConnectionString "SERVER=.\SQLEXPRESS;Database=PersonProd;Trusted_Connection=true;"

1 - They allow you to structure your database is a platform-agnostic way. Different DB platforms have different ways to write DM SQL - Migrations abstract that.

2 - They allow you to "version" your database using code. You can go up, down, and all around with the idea that Migrations allow you to step through time easily. This is especially helpful with deployment - when you need to add a column it's in your code and you deploy, and during deployment your DB (which is often a different platform than your test) is updated.

3 - Tooling is often a set of command line calls in the *nix world. Some people like it, others don't. If you want to change your DB around often it's a bit of a lengthy process if you're not familiar with the tooling.

4 - You can seed your database as well as execute additional calls inline. There's a special file in rails for this (db/seeds) but you can also work with your model directly inside the Migration file.

5 - Working in teams, you simply check in your migrations and the team members pull - then they can run on their machines keeping everyone in sync.

With respect to .NET - well we don't have most of these problems aside from the "versioning" idea. It is a fine thing to be able to write your DB commands in code and execute them over time - especially if you want those commands executed on the server.

But that's not what EF Migrations are offering. Which brings me around to my point and question: what problem is this solving? So far it seems that:

1 - It's SQL Only (this is a guess given the name, but I think it's a good one)

2 - It doesn't have a notion of versioning

3 - It doesn't work with "command" files - it's automatic.

4 - No deployment story.

It seems that at the outset it's removing the need for me to go and update my schema, then update my code. But doesn't CodeFirst already do that? It seems that this tool is simply giving me explicit control over that process in the command line. Which is neat - but VS offers a pretty stellar DB tool and I'm thinking a little copy/paste edit to my Table and Codefiles is probably easier than writing that change code you have here.

And yes, I know it's Alpha. And yes, I know the team wants feedback but ... well they said it themselves:

However, our efforts are somewhat sheltered from the ‘real world’ ...

This is just a tad confusing, unless of course there's more you can't say. And if that's the case, I'll wait to offer more thoughts :).

Your loving Douchebag, Rob

Between releases the database schema and data are continuously evolving. Say in v1 we add two columns to a contacts table, Address and Telephone, later on v1.1 we move that data from first table to a new table ContactInfo, and then we delete the two columns from Contacts table.

The db we have in production is in v0.9, now we want to deploy v1.1 to production, with this migration type, EF would only infer that two columns need to be deleted and one table created. So the middle step that fills data between the tables is lost!

We have developers with different db versions, that need to upgrade when merging with latest versions, test db filled with data, production systems that need to be upgraded. All with valuable data, that cannot be replaced or recreated.

This happened in my team, when we tested VS Database edition, and this proposed model is like that, schema comparing, not really a db migration system.

What I like about this CTP is it does some introspection on your models and can generate / output a migration script for you (or hit whatever you specify in the connection string argument). Being able to get that script so /easily/ means that deploying to production becomes less scary.

However, I would love to see something that is more of a combination of this CTP and the Rails way. Ideally, this might still do the clever introspection on your models, but create a new migration file each time it is run (this is a unique schema version of the db). Each migration file would be able to manipulate the DB "up" or "down" to a certain version.

Manatee does a great job as well as FluentMigrator (excellent example). This sort of syntax / DSL (fluent or not) could be generated and outputted (20110728207_change_person_model.sql) to a unique file by Migrating Magic Unicorns 0.5's special automigration/detection voodoo. The benefit would be a human readable syntax that the developer could easily override and change if needed, but still run something like "update-database" and have it execute all the migration files.

I think developers would really value being able to move between (up /and/ down) discrete schema versions at will and be able to tweak / modify the generated output, while still benefitting from Migrating Magic Unicorns's automagic detection of changes to your model.

Very cool start though :-)

However that output you showed above is scary. It should probably be a modify table that adds one column only. I guess it's just a quirk of the current setup? Will try it out tonight for myself.

@iCeClow Well, in the 0.9 to 1.1 scenario, the data updates will have to be modified to go from 0.9 to 1.1 instead of 0.9 to 1.0. That shouldn't be so difficult right? (I know hypothetically everything is easy ;-)...). From what I see above Migrations is more about schema changes than Data Migration. Or did I miss something?

1. If you have many changes, you can use -script to see exactly where the data loss will occur in the SQL. I had many changes and the console output didn't make it clear where my data loss would be.

2. I used Nuget in my project to get EntityFramework 4.1.1075 dependency; however, it defaulted to my global 4.1.0 installation and caused me a lot of headache to figure out I needed to update that as well.

I have an open-source project called Wizardby, which solves exactly the same problem of database versioning, migration and (to an extent) deployment. It features database-agnostic declaratibe DSL for specifying changes and it is indeed abstract: it supports all versions of SQL Server, SQLite and SQL Compact (other DBs can be supported as well, but I never got round to actually implementing this).

I love that feature to generate update scripts for production database.

When you change a one-many relationship to a many-many, there is a data loss error.

There must be a way to prevent data loss, and migrate the FKs to the newly created table.

As is said in another comment, this migration step approach comes from the rails world, where the figured long ago that schema comparing was not enough, to support development and production database migration. I want to be clear here, data is a very important part of a successful database migration, and should be taken into account, also in the development scenario. Also schema comparing only works from 1 version to another, when the db version is older than 1 version, the script is most likely going to fail.

So, when the PROD or Staging DB is in a DMZ, how exactly will the Nuget delivered migrations code talk to the DB.

Do you expect me to install Visual Studio on a machine in the DMZ and then open up the firewall to allow that machine to connect to a Nuget server?

I'm glad we're getting migrations. It's been a missing LEGO piece for a while.

I don't agree about hte LEGO piece. There are many migration libraries out there, and Microsoft should have adopted one, made it pluggable and then plugged it into EF. What we have here is not a LEGO piece, or if we must see it as a LEGO piece, it's one of those special pieces crafted for a specific LEGO model/box only.

Or am I missing something? Could we use this with NHibernate mapping for instance, or with our own SQL scripts?

so my feedback is: drop the EF name from this LEGO piece and make it so it's just "a library for database schema migrations" and release the EF 'glue' as a separate package.

Thanks for the post anyway

How would you run the migration to update the DB schema for the appharbor DB?

With Heroku it'd be something like

heroku rake db:migrate

It would be lovely if we could get something similar

The comments from iCeCloW seem to really sum up most of my concerns with how it “versions”. It seems like this is just a somewhat smarter “drop the database” setup that EF has baked in for development.

I’m starting to consider the implications of using EF 4.1 code first, (potentially with this package) for prototyping phases of projects. After the project hits a big enough milestone at this point to script the database out to sql creat e tables. Create a fluent migrator V1 from those scripts, and then replace usage of EF with a Micro ORM and then continue future revisions by hand.

It's essential. The DbProviderFactories are essential for generic database code. Abandoning that for specific, assembly loading code, will make ado.net provider writers not install the factory in machine.config anymore, which makes it completely useless. Now, when the EF requires it, they have to install it in the machine.config to make it available for all applications. This is a good thing, because it frees your code from references to specific versions of the ado.net provider dlls. Anyone who has ever worked with e.g. ODP.NET knows how painful this can be. the provider model solves all that.

As someone who's day job is all about data-access code and ado.net providers, I really don't want to go back to those days and can't think of a single reason why one would want to go back to that system.

http://social.msdn.microsoft.com/Forums/en-US/adonetefx/thread/70585239-9ad4-4ec7-be12-0ae60c285410

This is all great feedback and I wanted to touch on some of the common things that are coming up. My intention is purely to share what we have been thinking… not to try and convince you to drink the koolaid :) ... so your passionate feedback is welcomed…

Lack of a Provider Model: Absolutely hear you! Our team has been talking about support for other databases and have ideas on how this will all piece together but we didn’t implement them for the CTP. Your comments make it pretty clear this needs to be a priority.

Too Much Magic: We definitely don’t expect folks to just trust ‘auto-magic migrations’ all the time, in-fact there are some changes that just can’t be done automatically. The idea is that you can use ‘automatic upgrade’ when you are happy for the changes to be inferred and ‘custom scripts’ when you want to take control. You can of course just always use custom scripts and never let anything happen automatically (I’ll talk to the ‘raw SQL vs code’ point in a second). If you opt for the ‘everything is a custom script’ approach then you essentially get the Rails experience but the scripts are pre-populated based on how you changed your model. In the CTP you will also get some redundant Source.xml and Target.xml files in the Migrations directory but this is not required… we just didn’t have time to switch them off for the CTP.

SQL vs Code in Custom Scripts: We really wanted feedback on this… and we are getting it loud and clear! Sounds like folks pretty unanimously want to use custom scripts regularly and want them to be code based and provider independent. Our plans around the provider model actually open up some interesting possibilities here for plugging in various format options for defining a custom script. These could include SQL, an existing code based migration solutions or something purpose built.

Versioning???: We haven’t done a good job of talking about this so far... when you add a custom script to your project you essentially end up with a named version. It’s not in the CTP but we absolutely plan to allow upgrade/downgrade between these versions. And of course if you decided to opt for the ‘I don’t trust this magic stuff… everything is a custom script’ then every change ends up as a named version. If, however, you decide that magic is ok for you then you can still insert a ‘custom script’ that isn’t actually making any changes and you end up with a named version between a bunch of changes that are going to be inferred automatically.

Please keep the feedback coming and let me know if I missed the point on any of your concerns.

This seems primarily targeted to development scenarios. I would have though migration would be far more useful in non-development scenarios where you typically cannot afford to delete the database at every schema change (this is more likely to work during development). I am missing something along the lines of Database.MigrateSchema(), which could be called in code when it is detected that there is a schema mismatch. Is this something that is planned? Otherwise I fail to really see the point of this.

I tried to use EF Code First in a WPF client app for data storage, but I quickly backed of that idea due to the lack of migration support. I fail to see how this would help me here, unfortunately.

That's the point of this, you wouldn't turn on the drop/create option for the EF Code First in the Context but rather use the one that only creates it if it doesn't exist, e.g.

public class DBInitializer : CreateDatabaseIfNotExists<DBContextName>

In fact as extra assurance to avoid that possibility you can drop the EDMMetaData tracking table from the production database and it won't even try to update or drop the tables even if someone puts in the wrong setting in the DBInitializer.

Yes, that's clear. But I would still have to have created SQL scripts ahead of time during development and included them as resources and then run these scripts manually at run-time, if I understand things correctly. That seems an awful lot of work for simple scenarios - although it certainly should be an option for more complicated scenarios. But for simply adding a column or two this seems to be way too complex.

If you follow the development from scratch there is no need for you to have created SQL scripts. It will optionally generate scripts when are ready to deploy to staging or production if you prefer or are forced to do it that way. At each incremental step it will update the database without data loss when you ask it to update the database in the command line "update-database".

Update-Database : Could not load type 'System.Data.Entity.Infrastructure.DbContextInfo' from assembly 'EntityFramework, Version=4.1.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089'.

At line:1 char:16

+ update-database <<<<

+ CategoryInfo : NotSpecified: (:) [Update-Database], TypeLoadException

+ FullyQualifiedErrorId : System.TypeLoadException,System.Data.Entity.Migrations.Commands.MigrateCommand

Update-Database : Could not load type 'System.Data.Entity.Infrastructure.DbContextInfo' from assembly 'EntityFramework, Version=4.1.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089'.

Does anyone have any idea why I am geting this TypeLoadException? I tried to make a reference to DbContextInfo from within code and I get the same error. How one can proceed to debug this Type Loading error?

Thanks in advance for any hint

Do you have the Entity Framework June 2011 CTP tools installed? That installer has a publisher policy in it which forces System.Data.Entity.dll v4.2 to be loaded which also has the DbContext types in it, but not the DbContextInfo type. The Migrations CTP is not compatible with the June 2011 CTP with the other new EF features. To get it working, you'll need to remove the publisher policy dll from your GAC and restart VS.

Really I wonder why they didn't just take FluentMigrator and make a flavor of it that will build migrations for Models. But then I guess it wouldn't be EF Migrator. Seems silly to reinvent the wheel.

What shall I do?

Thanks!

+1 for MySQL support.

1. Create a DB using EF 4.1 model designer. Add some entities.

2. Create the database using the right-click "Generate..." from the designer. Run the SQL scripts on my SQL Azure server. Let's call this our "production database"

Now everything works great and the website is humming. Now:

3. Add a new scalar property to an entity in the DESIGNER.

4. Generate the scripts again.

Uh oh. Now how do I update the "production database" with the updated schema? OK, I can use Visual Studio's schema compare! Great! But wait... the generated Visual Studio SQL has way too much SQL garbage, and it errors on SQL Azure! So now I have to manually edit all of the SQL and remove the garbage so I'm left with the simple "ALTER" statements. I also now have to write some SQL by hand to update the data.

This all seems to work, but there must be an easier way?

- Guy approaching it all wrong

What I am suggesting is to use Database Project for version control and development and DB Data & Schema Comparison tool for Entity Framework Code-First for keeping entities model and db schema / data in sync.

Check this out: EntityFramework.SchemaCompare at GitHub

I have small problem with migration. Scenario is following:

1) One developer creates model on local PC and update it

2) Second developer setup local DB which based on updated models (so he does not have in System Tables any migration history, but DBs for all models created)

3) Second developer make changes in model and run following script

Update-Database -TargetDatabase SomeContext -ProjectName SomeProject -Verbose

As a result second developer has

Found 1 pending explicit migrations: [201112141258354_ConnectionMigration]. (Which not actually required because DB created from already updated models)

System.Data.SqlClient.SqlException (0x80131904): ALTER TABLE DROP COLUMN failed because column 'Ip' does not exist in table 'Contacts'.

at System.Data.SqlClient.SqlConnection.OnError(SqlException exception, Boolean breakConnection)

at System.Data.SqlClient.SqlInternalConnection.OnError(SqlException exception, Boolean breakConnection)

at System.Data.SqlClient.TdsParser.ThrowExceptionAndWarning()

at System.Data.SqlClient.TdsParser.Run(RunBehavior runBehavior, SqlCommand cmdHandler, SqlDataReader dataStream, BulkCopySimpleResultSet bulkCopyHandler, TdsParserStateObject stateObj)

at System.Data.SqlClient.SqlCommand.RunExecuteNonQueryTds(String methodName, Boolean async)

at System.Data.SqlClient.SqlCommand.InternalExecuteNonQuery(DbAsyncResult result, String methodName, Boolean sendToPipe)

at System.Data.SqlClient.SqlCommand.ExecuteNonQuery()

at System.Data.Entity.Migrations.DbMigrator.ExecuteSql(DbTransaction transaction, MigrationStatement migrationStatement)

at System.Data.Entity.Migrations.Infrastructure.MigratorLoggingDecorator.ExecuteSql(DbTransaction transaction, MigrationStatement migrationStatement)

at System.Data.Entity.Migrations.DbMigrator.ExecuteStatements(IEnumerable`1 migrationStatements)

at System.Data.Entity.Migrations.Infrastructure.MigratorBase.ExecuteStatements(IEnumerable`1 migrationStatements)

at System.Data.Entity.Migrations.DbMigrator.ExecuteOperations(String migrationId, XDocument targetModel, IList`1 operations, Boolean downgrading)

at System.Data.Entity.Migrations.DbMigrator.ApplyMigration(DbMigration migration, DbMigration lastMigration)

at System.Data.Entity.Migrations.Infrastructure.MigratorLoggingDecorator.ApplyMigration(DbMigration migration, DbMigration lastMigration)

at System.Data.Entity.Migrations.DbMigrator.Upgrade(IEnumerable`1 pendingMigrations, String targetMigrationId, String lastMigrationId)

at System.Data.Entity.Migrations.Infrastructure.MigratorLoggingDecorator.Upgrade(IEnumerable`1 pendingMigrations, String targetMigrationId, String lastMigrationId)

at System.Data.Entity.Migrations.DbMigrator.Update(String targetMigration)

at System.Data.Entity.Migrations.Infrastructure.MigratorBase.Update(String targetMigration)

at System.Data.Entity.Migrations.UpdateDatabaseCommand.BeginProcessing(MigratorBase migrator)

at System.Data.Entity.Migrations.BaseMigratorCommand.BeginProcessingCore()

I tried following this demo (assuming the general theme would be the same), but can't get Update-Database to work--PM keeps saying its an unknown command/commandlet.

Thanks and love the content you have here!

Comments are closed.

_thumb.png)

_thumb.png)

It handles most scenarios very nicely, even those that are blocked by any other Microsoft database versioning solution because "data loss" may occur. In FluentMigrator the developer is responsible to handle "data loss", and doing so is easy thanks to the syntax.

Also, when a custom script is need or some change is beyond the fluent syntax, a way to executing custom scripts is provided. This case, that at first may seem strange, is very common in many projects: updating a database view, making sure it is in sync with latest model changes, creating or altering stored procedures, or altering computed columns are real world scenarios that happen in projects of any size.