How do we know if mobile apps are secure?

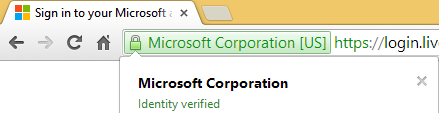

You know how we're always telling our non-technical non-gender-specific spouses and parents to be safe and careful online? You know how we teach non-technical friends about the little lock in the browser and making sure that their bank's little lock turned green?

HTTPS & SSL doesn't mean "trust this." It means "this is private." You may be having a private conversation with Satan.

- Scott Hanselman (@shanselman), April 4, 2012

Well, we know that HTTPS and SSL don't imply trust, they imply (some) privacy. But we have some cues, at least, and after many years, while a good trustable UI isn't there, at least web browsers TRY to expose information for technical security decisions. Plus, bad guys can't spell.

But what about mobile apps?

I download a new 99 cent app and perhaps it wants a name and password. What standard UI is there to assure me that the transmission is secure? Do I just assume?

What about my big reliable secure bank? Their banking app is secure, right? If they use SSL, that's cool, right? Well, are they sure who they are talking to?

IOActive Labs researcher Ariel Sanchez tested 40 mobile banking apps from the "top 60 most influential banks in the world."

40% of the audited apps did not validate the authenticity of SSL certificates presented. This makes them susceptible to man-in-the-middle (MiTM) attacks.

Many of the apps (90%) contained several non-SSL links throughout the application. This allows an attacker to intercept the traffic and inject arbitrary JavaScript/HTML code in an attempt to create a fake login prompt or similar scam.
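For the curious, here's roughly what "validating the authenticity of SSL certificates" looks like in code. This is a minimal Python sketch using the standard `ssl` module, not code from the audited apps (which would be written against iOS or Android APIs). The helper names `strict_tls_context` and `is_validating` are made up for illustration.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Return a client context that verifies the certificate chain and hostname."""
    # create_default_context() turns on CERT_REQUIRED and check_hostname.
    return ssl.create_default_context()


def is_validating(ctx: ssl.SSLContext) -> bool:
    """True only if the context checks both the chain and the hostname."""
    return ctx.verify_mode == ssl.CERT_REQUIRED and ctx.check_hostname


# The anti-pattern the audit describes: validation switched off.
insecure = ssl.create_default_context()
insecure.check_hostname = False       # has to be disabled before verify_mode can be relaxed
insecure.verify_mode = ssl.CERT_NONE  # now accepts any certificate, including an attacker's

print(is_validating(strict_tls_context()))
print(is_validating(insecure))
```

The point is that the safe behavior is usually the default, and the vulnerable behavior takes a couple of deliberate lines, often added to "make the dev server work" and never removed.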

If I use an app to log into another service, what assurance is there that they aren't storing my password in cleartext?

"It is easy to make mistakes such as storing user data (passwords/usernames) incorrectly on the device, in the vast majority of cases credentials get stored either unencrypted or have been encoded using methods such as base64 encoding (or others) and are rather trivial to reverse," says Andy Swift, mobile security researcher from penetration testing firm Hut3.
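To see why base64 counts as "rather trivial to reverse," here's a tiny Python sketch. The function names `obscure` and `recover` and the sample password are made up for illustration. Base64 is an encoding, not encryption: there is no key, so anyone who can read the stored value can get the password back.

```python
import base64


def obscure(password: str) -> str:
    """Encode (NOT encrypt) a password the way a careless app might before saving it."""
    return base64.b64encode(password.encode("utf-8")).decode("ascii")


def recover(stored: str) -> str:
    """Reverse the encoding. No key or secret is needed."""
    return base64.b64decode(stored).decode("utf-8")


stored = obscure("hunter2")
print(stored)           # looks scrambled to a human
print(recover(stored))  # but decodes straight back to the original
```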

I mean, if Starbucks developers can't get it right (they stored your password in the clear, on your device) then how can some random Jane or Joe Developer? What about cleartext transmission?

"This mistake extends to sending data too, if developers rely on the device too much it becomes quite easy to forget altogether about the transmission of the data. Such data can be easily extracted and may include authentication tokens, raw authentication data or personal data. At the end of the day if not investigated, the end user has no idea what data the application is accessing and sending to a server somewhere." - Andy Swift

I think that it's time for operating systems and SDKs to start imposing much more stringent best practices. Perhaps we really do need to move to an HTTPS Everywhere Internet as the Electronic Frontier Foundation suggests.

Transmission security doesn't mean that bad actors and malware can't find their way into App Stores, however. Researchers have been able to develop, submit, and have approved bad apps in the iOS App Store. I'm sure other stores have the same problems.

The NSA has a 37 page guide on how to secure your (iOS5) mobile device if you're an NSA employee, and it mostly consists of two things: Check "secure or SSL" for everything and disable everything else.

What do you think? Should App Stores put locks or certification badges on "secure apps" or apps that have passed a special review? Should a mobile OS impose a sandbox and reject outgoing non-SSL traffic for a certain class of apps? Is it too hard to code up SSL validation checks?

Whose problem is this? I'm pretty sure it's not my Dad's.


About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.


My rule of thumb is to never use the same password across different mobile apps & websites. For more sensitive apps like my bank account, I have 2 factor auth set up, which is the only reason I agreed to use the app in the first place.

I agree though, this is a super important problem that needs to be solved.

That still doesn't stop developers from storing passwords in cleartext on a device, but that's a slightly different problem. Browser apps for the desktop might also be storing passwords in plaintext in e.g. local storage. There is no security in the green lock icon there either.

If the app encrypts the password, it will need to keep the key somewhere on the device.

If a manifest says the app will only access *.microsoft.com and I trust Microsoft, then I will install the application.

As a user, I don't like that the app I'm installing is going to be sending whatever information to any host that I don't know of. Locking it down would be a huge benefit to both consumers and serious producers of apps.
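A manifest check like that could be sketched in a few lines. This is a hypothetical Python illustration, not how any real mobile OS enforces it: `DECLARED_HOSTS` stands in for the manifest entry and `host_allowed` is a made-up helper. The one subtle bit is making sure `*.microsoft.com` matches real subdomains and not look-alikes such as `evilmicrosoft.com`.

```python
from urllib.parse import urlsplit

# Hypothetical manifest entry: the hosts the app declares it will contact.
DECLARED_HOSTS = ("*.microsoft.com",)


def host_allowed(url: str, declared=DECLARED_HOSTS) -> bool:
    """Return True if the URL's host matches one of the declared patterns."""
    host = (urlsplit(url).hostname or "").lower()
    for pattern in declared:
        pattern = pattern.lower()
        if pattern.startswith("*."):
            suffix = pattern[1:]  # ".microsoft.com", leading dot included
            # Require the dot so "evilmicrosoft.com" does not match,
            # and require at least one character before the suffix.
            if host.endswith(suffix) and len(host) > len(suffix):
                return True
        elif host == pattern:
            return True
    return False


print(host_allowed("https://login.microsoft.com/oauth"))
print(host_allowed("https://evilmicrosoft.com/"))
```

In this sketch the bare domain `microsoft.com` is not covered by the wildcard; a manifest would have to list it separately.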

Anyone have any pointers on how to do this correctly?

Or, more simply, just give people a setting to disallow all non-SSL network traffic from apps (and possibly even make it "on" by default).
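As a rough idea of what such a setting would do under the hood, here's a minimal Python sketch. It's an assumption-laden illustration, not a real OS feature: `enforce_https`, `InsecureTransportError`, and the `require_tls` flag are all made-up names, with `require_tls=True` standing in for the "on by default" setting.

```python
from urllib.parse import urlsplit


class InsecureTransportError(Exception):
    """Raised when an app tries to use a non-TLS URL while the setting is on."""


def enforce_https(url: str, require_tls: bool = True) -> str:
    """Return the URL unchanged if it is allowed, otherwise raise."""
    scheme = urlsplit(url).scheme.lower()
    if require_tls and scheme != "https":
        raise InsecureTransportError(f"blocked non-HTTPS request: {url}")
    return url


enforce_https("https://bank.example/login")      # allowed
try:
    enforce_https("http://bank.example/login")   # blocked while the setting is on
except InsecureTransportError as err:
    print(err)
```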

(Continuing @Everybody now...)

However, maybe that's what's needed. If apps could store their secure data in something that's tamper proof.

WILD SPECULATIVE EXAMPLE: Like maybe apps could use a tiny, separate bit of RAM that's kept alive all the time whether the phone is on or not (which would thus zero out when voltage is no longer applied) and then, somehow, only grant the right to access portions of that RAM to particular apps... like maybe by calculating a cryptographic signature of the first or last 10K instructions of the App's instruction set and using that for authenticating the app to get access to that piece of RAM or something...

I suspect for most applications, building this kind of thing into the hardware (or utilizing similar hardware if it already exists!) would be overkill. Security is only as good as its weakest link, and focusing too much on technical aspects is sure to keep us from seeing more obvious attack points.

The problem with making the requirements for apps too stringent is that these requirements may just give an incentive to create a monoculture/framework to secure data, where everybody is doing the same thing. When everybody starts doing the same thing to secure data, that gives attackers an incentive to attack that framework to find weaknesses, because once a weakness is found, it could be used to attack everybody. My made-up example above is a prime example of something that could fall to this kind of attack and fail.

Certainly computers can be kept relatively secure if they "do the same thing", but that doesn't mean my statements are without merit; in every Linux, Mac, Android, or Windows machine of a common kind you have the same potential weaknesses. Over the years, the security designs generally utilized have changed due to different kinds of attack becoming readily available and certain evolving designs proving more useful.

Heterogeneity is your friend.

Also, app developers may certainly see the flaws of a particular design but, due to corporate pressures, aren't in any position to do anything about that design, even if it violates recommended guidelines... or for that matter, laws.

It's not that everyone involved would intentionally violate the law; more often, those who have the power to require changes in the design have a rather *lenient* interpretation of the law, or otherwise convince themselves they aren't in violation of it regardless of what is actually written.

Honestly, given the way many laws are written, I wouldn't blame them.

We don't need or want everybody to do the same thing. We just need everybody to avoid doing stupid things. (This also sums up my whole philosophy about life.)

I agree that any other online communication should be over SSL etc. Thanks

How would the app use the password to sign into the server next time you open it once it's hashed?
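The thread doesn't really answer this one. One common pattern (an assumption on my part, not something described in the post or the comments) is that the app never keeps the password at all: the server stores a salted hash, checks the password once at login, and hands back a random token that the app stores instead. Here's a hedged Python sketch with made-up names (`register`, `login`, `whoami`) and in-memory dictionaries standing in for a real server's database.

```python
import hashlib
import hmac
import os
import secrets

# Hypothetical server-side state; a real service would use a database.
_users = {}   # username -> (salt, password_hash)
_tokens = {}  # token -> username


def _hash(password: str, salt: bytes) -> bytes:
    """Derive a salted hash; the server stores this, never the password."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def register(username: str, password: str) -> None:
    salt = os.urandom(16)
    _users[username] = (salt, _hash(password, salt))


def login(username: str, password: str):
    """Check the password once and return a random token, or None on failure."""
    record = _users.get(username)
    if record is None:
        return None
    salt, expected = record
    if not hmac.compare_digest(_hash(password, salt), expected):
        return None
    token = secrets.token_urlsafe(32)
    _tokens[token] = username
    return token


def whoami(token: str):
    """What the app sends on later launches: the token, not the password."""
    return _tokens.get(token)


register("dad", "correct horse battery staple")
token = login("dad", "correct horse battery staple")
print(whoami(token))
```

A stolen token is still a problem, but the server can revoke it without the user changing a password they may have reused elsewhere.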

You know how we're always telling out non-technical.....

....our non-technical.....

</Grammar Police>

I think this is ultimately an Appstore responsibility. When we shifted from "you can install anything you like" to "You can only install stuff from our appstore" customers intrinsically expect that only "well behaved" apps will be allowed in the store - and why wouldn't they?

Asking a user if an app can have permission to x,y and z is pointless. 99% of users don't even read the box, it's just one more button to click before they get their app installed.

So, until then, I think it would be a lot easier to just not use apps when sensitive information is involved.

For sure, I really would not use bank apps or any that involve money... especially my pennies!

Also, I agree with Tudor: it seems that people in the US (or people in the US conurbations) tend to think that their technological/network reality is widespread. In several places/countries people use a mobile device over WiFi in the first place, either to avoid paying for expensive data plans or because there is just no other way to get connected.

It would really be a good marketing practice for companies that deal in sensitive information to show the user that they take security seriously and place it front and center.

Anyone know if PCI compliance looks at mobile applications? They audit websites and back-end systems but what about mobile apps?

Thanks,

Pedro.
