Virtual Machine CPU Performance

In the last post on Virtual Machine Performance Tips I listed some realistic goals for your Guest OS (VM) performance, which I originally got from J. Sawyer at Microsoft:

Ideally, Virtual PC performance is at:

- CPU: 96-97% of host

- Network: 70-90% of host

- Disk: 40-70% of host

In the comments Vincent Evans said:

From personal experience with VM (running in MS Virtual Server) - i have grave doubts about your claim of VM CPU performance approaching anywhere near 90% of native.

Can you put more substance behind that claim and post a CPU benchmark of your native server vs. vm running on that server? For example i used a popular prime number benchmark (can't remember the name, wprime maybe? not sure.) and my numbers were more like 70% of native.

I agreed, so I took a minute during lunch and ran a few tests. For the test I used the Freely Available IE6 WindowsXP+SP2 Test Virtual Machine Image along with the Free Virtual PC 2007 and Virtual Server 2005 R2 as well.

These are neither scientific, nor are they rigorous. They are exactly what they claim to be. They are me running some tests during lunch, so take them as such. I encourage those of you who care more deeply than I to run your own tests and let me know why these results either suck, or are awesome.

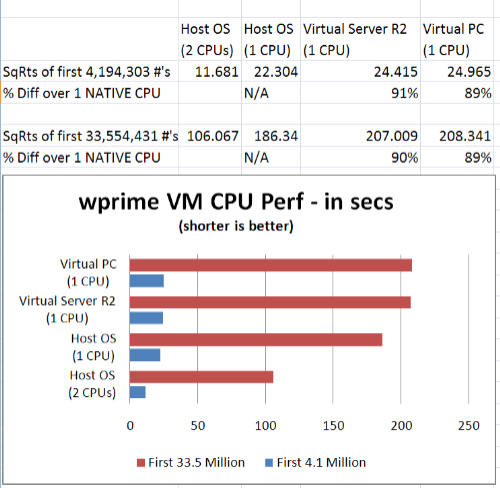

I used wprime to calculate the square roots of the first 4,194,303 numbers. Wprime can spin up multiple threads, and this was significant because my system has two processors, so you'll see what kind of a difference this made in the tests.

Both Virtual PC and Virtual Server only let the Guest OS use one of the processors, so I did the tests on the Host OS with one, then two processors, to make sure the difference is clear.
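For readers who want a feel for this kind of workload without installing wprime, here is a minimal single-threaded sketch in Python. It is not wprime's actual code: the function names, the starting guess, and the stopping tolerance are all assumptions made for illustration. It simply times Newton's-method square roots over the first `count` integers, which is the same style of CPU-bound loop.

```python
import time


def newton_sqrt(k: float, tolerance: float = 1e-9) -> float:
    """Approximate sqrt(k) with Newton's method applied to f(x) = x*x - k."""
    if k == 0:
        return 0.0
    # Starting guess is an assumption for this sketch, not wprime's documented choice.
    x = k / 2.0 if k > 1 else 1.0
    while True:
        next_x = 0.5 * (x + k / x)  # Newton step: x - f(x) / f'(x)
        if abs(next_x - x) <= tolerance * next_x:
            return next_x
        x = next_x


def run_benchmark(count: int) -> float:
    """Return the seconds taken to compute square roots of 1..count on one thread."""
    start = time.perf_counter()
    for k in range(1, count + 1):
        newton_sqrt(float(k))
    return time.perf_counter() - start
```

Running `run_benchmark(100_000)` once on the Host OS and once inside the Guest OS gives two timings to compare. Because the loop is single-threaded, it only corresponds to the one-processor runs described above.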

My Hardware (as seen by wprime from the Host OS)

>refhw

CPU Found: CPU0

Name: Intel(R) Core(TM)2 CPU T7600 @ 2.33GHz

Speed: 2326 MHz

L2 Cache: 4096 KB

CPU Found: CPU1

Name: Intel(R) Core(TM)2 CPU T7600 @ 2.33GHz

Speed: 2326 MHz

L2 Cache: 4096 KB

Results

Looks like for both tests a VM's CPU, when stressed, runs at just about 90% of the speed of the Host OS, which is lower than the goal of 96-97% I quoted earlier. Tomorrow I'll update this post after rebooting, going into the BIOS, turning off my system's Hardware Assisted Virtualization, and seeing if that makes a difference. If the results are lower, as I expect, that will suggest that hardware virtualization support (VT) is useful.

You can try these tests yourself on your own machines using wprime. Just make sure you tell wprime how many threads to use in your Host OS, depending on your number of processors. Thanks to Vincent for encouraging the further examination!

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

My Hardware

refhw

CPU Found: CPU0

Name: Intel(R) Core(TM)2 CPU T7200 @ 2.00GHz

Speed: 1995 MHz

L2 Cache: 4096 KB

CPU Found: CPU1

Name: Intel(R) Core(TM)2 CPU T7200 @ 2.00GHz

Speed: 1995 MHz

L2 Cache: 4096 KB

4M test:

Host OS, 2 CPUs: 8.453s

Host OS, 1 CPU: 16.230s

VMWare Server, 1 CPU: 16.947s (95.8%)

32M test:

Host OS, 2 CPUs: 67.507s

Host OS, 1 CPU: 128.512s

VMWare Server, 1 CPU: 135.396s (94.9%)

I do have VT enabled in the BIOS. I do all of my development work in the virtual machine, so it's nice to see it can utilize most of a single CPU.
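The percentages in parentheses above appear to be the host's one-CPU time divided by the VM's time. A small sketch of that arithmetic, using the timings quoted in this comment (the function name `relative_speed` is just an illustrative choice):

```python
def relative_speed(host_seconds: float, guest_seconds: float) -> float:
    """Guest speed as a percentage of host speed (a shorter time means faster)."""
    return host_seconds / guest_seconds * 100.0


# (host 1-CPU seconds, VM 1-CPU seconds) as reported above
results = {
    "4M": (16.230, 16.947),
    "32M": (128.512, 135.396),
}

for name, (host, guest) in results.items():
    print(f"{name}: VM runs at {relative_speed(host, guest):.1f}% of host")
# prints:
# 4M: VM runs at 95.8% of host
# 32M: VM runs at 94.9% of host
```

The comparison only makes sense against the host's one-CPU run, since the VM sees a single processor.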

I'll add my anecdotal evidence. I've converted a few Virtual PC VM's to VMWare format and I haven't seen any degradation in performance. That is, they seem to run about as fast as they did under Virtual PC/Server. This is w/o any hardware virtualization assistance.

Scott H:

I believe that the XP SP2 w/IE images come with the pagefile disabled. Did you enable it before running your test?

@wgaben - Nice stuff! I'll check out VMWare.

Core 2 Duo ~ 3 GHz

wPrime, 3 million, 1 thread

Host machine (dual core):

7.5 seconds

Virtual PC 2007 Windows XP machine:

8.1 seconds

** So it's 92% as fast **

The one variable here is that the VM emulates a single core machine, whereas the host is dual core, so that might artificially inflate the host results. I'd have to test on a true single core machine to say with 100% certainty.

I am surprised that your numbers are so high. Admittedly it's been a little while since i've done benchmarks, and confronted by your evidence (and what others have posted) i am going through my memory trying to figure out where i got the 70% number from, but i can't be sure.

Very interesting.

Here's another angle - i have a pretty fast dual-core box that is running SQL2005 inside a virtual environment, and it's a lot slower than a similar box running SQL2005 natively (same database in both cases) - i can come up with a whole bunch of reasons why that may be (native box has 2GB and virtual box has 512MB) - but the difference is that the virtual box is about 50% slower.

I don't know what this "evidence" really reveals, except to say that despite claims to the contrary - you can have a somewhat underwhelming performance out of a virtual environment too. But if this is your goal - i don't really have the recipe, except to say that it can be done, and i've done it :-)

I'll look into that subject more, since virtual computing is something that i am interested in and have found increasing use for lately.

While i have observed increases when moving to more powerful hardware, it fell short of being a factor of 2.

Thanks for your blog, i enjoy it.

Having said that, I have never tried turning the multiprocessor switch on. I have a few dozen virtual machines (one or more for each client/project - I am a consultant), and they were all created from the same baseline operating system image, which was installed with the uniprocessor kernel.

My personal anecdotal evidence has been that VMWare outperforms VPC hands-down in apples-to-apples comparisons. VPC 2007 closes the gap considerably with VMW 5.5, but VMW 6 re-established VMWare as the clear performance leader. And I do a LOT of work inside virtual environments - my policy is no project development on the metal except for the rare cases where I need to work out UI problems under Vista Aero (VPC and VMW still do not provide an experience score above 1.0, and therefore the DWM will not run inside a virtual machine).

The system will boot from a standard 7200RPM drive with page files disabled.

The real question is what is the best (and CHEAPEST) approach with respect to storage for multiple concurrent VM access in a single box with mirroring for data and at least 120GB data storage for VMs.

So far I've come up with:

2x150GB Raptors in RAID1

1x150GB Raptor + 1x320GB 7200RPM - my guess is raptor speed read performance but slower than 7200RPM write performance?

2x320GB 7200RPMs

4x320GB 7200RPMs in RAID10

3x320GB 7200RPMs in RAID5

Does anyone have experience with commodity hardware in this style environment? Should i be considering USB2.0 or Firewire external HDs (even the RAID1 NAS boxes)?
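As a rough sanity check on the options listed above, usable capacity can be worked out with a few lines of Python. This is only a sketch: it assumes the two-drive options are plain mirrors (RAID1), ignores formatting overhead, and says nothing about read or write speed. The helper name `usable_gb` is made up for this example.

```python
from typing import Sequence


def usable_gb(level: str, drive_sizes_gb: Sequence[int]) -> int:
    """Approximate usable capacity; every drive counts as the smallest one."""
    n = len(drive_sizes_gb)
    smallest = min(drive_sizes_gb)
    if level == "RAID1":
        if n < 2:
            raise ValueError("RAID1 needs at least 2 drives")
        return smallest  # every drive holds a full copy
    if level == "RAID10":
        if n < 4 or n % 2:
            raise ValueError("RAID10 needs an even number of drives, at least 4")
        return smallest * n // 2  # half the drives are mirrors
    if level == "RAID5":
        if n < 3:
            raise ValueError("RAID5 needs at least 3 drives")
        return smallest * (n - 1)  # one drive's worth of parity
    raise ValueError(f"unsupported level: {level}")


# The two-drive options are assumed to be mirrored, per the stated requirement.
options = [
    ("RAID1", [150, 150]),
    ("RAID1", [150, 320]),
    ("RAID1", [320, 320]),
    ("RAID10", [320, 320, 320, 320]),
    ("RAID5", [320, 320, 320]),
]

for level, drives in options:
    capacity = usable_gb(level, drives)
    print(f"{level} {drives}: {capacity} GB usable, meets 120 GB: {capacity >= 120}")
```

Under these assumptions every option clears the 120GB requirement, so the choice comes down to price and performance rather than capacity.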

We have a bunch of Dell 1855 and 1955 blades that hosted our internal web application and external ecommerce applications and these were the first applications that I wanted to address.

My plan was a two-phase approach.

Phase-1 -- I needed to get all my servers converted to a VM environment.

Where required, I performed a hardware upgrade from 2GB to 4GB of RAM. Upgraded from 36GB internal disks to 300GB internal disk (more space is better for VMs, backups, etc.)

Since many of our servers needed to be upgraded, I have rebuilt over 50 servers during my VM project. Initially, I used VM Workstation 5.5 for many of our hosts. Soon thereafter, I upgraded to the free VM Server. Many of our web applications that had a host baseline of 50% CPU went up to 80-90% CPU after converting to VM. Only one VM per box during my migration... to keep extraneous server activity to a minimum plus allow me to fall back to my original hardware, if problems occurred.

The bottom line is that my existing hardware could handle the same amount of work, although it was still dedicated to the application it was running. However, it did afford me greater flexibility, because I could then easily clone images prior to software upgrades, plus have an image for disaster recovery purposes.

The conversion wasn't painless and I had to do a lot of this cloak and dagger style. Anytime there was an application issue, most folks would immediately blame VMware for my problem!!! I eventually stopped telling people what kind of hardware and configuration a particular server had.

Phase-2 -- Upgrade to ESX!

Now that I had our entire data center virtual, it was much easier for me to convince management that upgrading from VM Server to ESX would provide us even better benefits!

1) Better Performance

2) Better utilization of hardware

3) Hardware load balancing

4) Hardware failover

5) Centralized VM management

Microsoft really can't touch ESX in this regard. What I like the most is that the hardware load balancing is totally automatic and will migrate VMs (without any interruption of service) to another box where it determines they will run better. Having VMs auto-start on other hardware if the source hardware fails is another great benefit!

I ended up using 5 of our Dell 1955 blades with 16GB of RAM and utilizing our SAN, and the performance difference from the VM Server environment is like night and day.

Many of our sister data centers are strictly against virtualizing any of our core applications due to fear of poor performance and scalability.

I have tried Virtual PC 2005 and 2007 and have not been impressed with the performance.

VM Workstation 6 seems to run much better -- plus provides a lot of additional benefits, such as Snapshots, dual monitor support, etc.