Hello Dear Reader. You may feel free to add a comment at the bottom of this post, something like "Um, DUH!" after reading this. It's funny how one gets lazy with their own website. What's the old joke, "those who can't, teach." I show folks how to optimize their websites all the time but never got around to optimizing my own.

It's important (and useful!) to send as few bytes of CSS and JS and HTML markup down the wire as possible. It's not just about size, though; it's also about the number of requests to get the bits. In fact, that's often more of a problem than file size.

First, go run YSlow on your site.

YSlow is such a wonderful tool and it will totally ruin your day and make you feel horrible about yourself and your site. ;) But you can work through that. Eek. First, my images are huge. I've also got 184k of JS, 21k of CSS and 30k of markup. Note my favicon is small. It was a LOT bigger before and even sucked up gigabytes of bandwidth a few years back.

YSlow also tells me that I am making folks make too many HTTP requests:

This page has 33 external JavaScript scripts. Try combining them into one.

This page has 5 external stylesheets. Try combining them into one.

Seems that speeding things up is not just about making things smaller, but also asking for fewer things and getting more for the asking. I want to make fewer requests that may have larger payloads, but then those payloads will be minified and then compressed with GZip.
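To make the "fewer requests, bigger but compressed payloads" idea concrete, here is a minimal sketch in Python (picked purely for illustration; it is not what the blog runs on). The three strings are hypothetical stand-ins for separate stylesheets, and `bundle` and `gzip_size` are made-up helper names.

```python
import gzip

def bundle(parts: list[str]) -> str:
    """Concatenate sources in the given order; order matters for CSS and JS."""
    return "\n".join(parts)

def gzip_size(text: str) -> int:
    """Size in bytes of the text after gzip compression."""
    return len(gzip.compress(text.encode("utf-8")))

# Hypothetical stand-ins for three separate stylesheet files.
parts = [
    "body { line-height: 1; }\n" * 20,
    "ol, ul { list-style: none; }\n" * 20,
    "blockquote, q { quotes: none; }\n" * 20,
]

combined = bundle(parts)
raw_bytes = len(combined.encode("utf-8"))

print(f"{len(parts)} requests -> 1 request")
print(f"raw: {raw_bytes} bytes, gzipped: {gzip_size(combined)} bytes")
```

The sample text is deliberately repetitive, so it compresses far better than real CSS would. The point is only the shape of the trade: one larger file, one request, compressed on the way out.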

Optimize, Minify, Squish and GZip your CSS and JavaScript

CSS can look like this:

body {

line-height: 1;

}

ol, ul {

list-style: none;

}

blockquote, q {

quotes: none;

}

Or like this, and it still works.

body{line-height:1}ol,ul{list-style:none}blockquote,q{quotes:none}
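If you're curious what a minifier is doing to get from the first form to the second, here is a deliberately naive sketch in Python. It is a toy built on regular expressions, not how AjaxMin or YUICompressor work internally, and `naive_minify_css` is a made-up name. It would mangle CSS where whitespace or colons are significant (strings, some selectors), which is exactly why you use a real tool.

```python
import re

def naive_minify_css(css: str) -> str:
    """Toy minifier: fine for simple rules, not safe for arbitrary CSS."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop comments
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace runs
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)           # no spaces around punctuation
    css = css.replace(";}", "}")                           # last semicolon in a block is optional
    return css.strip()

readable = """
body {
    line-height: 1;
}
ol, ul {
    list-style: none;
}
blockquote, q {
    quotes: none;
}
"""

print(naive_minify_css(readable))
# prints: body{line-height:1}ol,ul{list-style:none}blockquote,q{quotes:none}
```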

There are lots of ways to "minify" CSS and JavaScript, and fortunately you don't need to care! Think about CSS/JS minifying kind of like the Great Zip File Wars of the early nineties. There are a lot of different choices, they are all within a few percentage points of each other, and everyone thinks theirs is the best one ever.

There are JavaScript specific compressors. You run your code through these before you put your site live.

And there are CSS compressors:

And some of these integrate nicely into your development workflow. You can put them in your build files, or minify things on the fly.

- YUICompressor - .NET Port that can compress on the fly or at build time. Also on NuGet.

- AjaxMin - Has MSBuild tasks and can be integrated into your project's build.

- SquishIt - Used at runtime in your ASP.NET applications' views and does magic at runtime.

- UPDATE: Chirpy - "Mashes, minifies, and validates your javascript, stylesheet, and dotless files."

- UPDATE: Combres - ".NET library which enables minification, compression, combination, and caching of JavaScript and CSS resources for ASP.NET and ASP.NET MVC web applications."

- UPDATE: Cassette by Andrew Davey - Does it all, compiles CoffeeScript, script combining, smart about debug- and release-time.

There are plenty of comparisons out there looking at the different choices. Ultimately, when compression percentages don't matter much, you should focus on two things:

- compatibility - does it break your CSS? It should never do this

- workflow - does it fit into your life and how you work?

For me, I have a template language in my blog and I need to compress my CSS and JS when I deploy my new template. A batch file and a command-line utility work nicely, so I used AjaxMin (yes, it's made by Microsoft, but it did exactly what I needed).

I created a simple batch file that took the pile of JS from the top of my blog and the pile from the bottom and created a .header.js and a .footer.js. I also squished all the CSS, including my plugins that needed CSS, and put them in one file while being sure to maintain file order.

I've split these lines up for readability only.

set PATH=%~dp0;"C:\Program Files (x86)\Microsoft\Microsoft Ajax Minifier\"

ajaxmin -clobber

scripts\openid.css

scripts\syntaxhighlighter_3.0.83\styles\shCore.css

scripts\syntaxhighlighter_3.0.83\styles\shThemeDefault.css

scripts\fancybox\jquery.fancybox-1.3.4.css

themes\Hanselman\css\screenv5.css

-o css\hanselman.v5.min.css

ajaxmin -clobber

themes/Hanselman/scripts/activatePlaceholders.js

themes/Hanselman/scripts/convertListToSelect.js

scripts/fancybox/jquery.fancybox-1.3.4.pack.js

-o scripts\hanselman.header.v4.min.js

ajaxmin -clobber

scripts/omni_external_blogs_v2.js

scripts/syntaxhighlighter_3.0.83/scripts/shCore.js

scripts/syntaxhighlighter_3.0.83/scripts/shLegacy.js

scripts/syntaxhighlighter_3.0.83/scripts/shBrushCSharp.js

scripts/syntaxhighlighter_3.0.83/scripts/shBrushPowershell.js

scripts/syntaxhighlighter_3.0.83/scripts/shBrushXml.js

scripts/syntaxhighlighter_3.0.83/scripts/shBrushCpp.js

scripts/syntaxhighlighter_3.0.83/scripts/shBrushJScript.js

scripts/syntaxhighlighter_3.0.83/scripts/shBrushCss.js

scripts/syntaxhighlighter_3.0.83/scripts/shBrushRuby.js

scripts/syntaxhighlighter_3.0.83/scripts/shBrushVb.js

scripts/syntaxhighlighter_3.0.83/scripts/shBrushPython.js

scripts/twitterbloggerv2.js scripts/ga_social_tracker.js

-o scripts\hanselman.footer.v4.min.js

pause

All those ones at the bottom support my code highlighter. This looks complex, but it's just making three files, two JS and one CSS, out of my whole mess of required JS and CSS files.

This squished all my CSS down to 26k, and here's the output:

CSS

Original Size: 35667 bytes; reduced size: 26537 bytes (25.6% minification)

Gzip of output approximately 5823 bytes (78.1% compression)

JS

Original Size: 83505 bytes; reduced size: 64515 bytes (22.7% minification)

Gzip of output approximately 34415 bytes (46.7% compression)

That also turned 22 HTTP requests into 3.
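The percentages AjaxMin reports are easy to check by hand. Here is a small Python sketch of the arithmetic, using only the byte counts from the output above; `percent_saved` is a made-up helper name.

```python
def percent_saved(before: int, after: int) -> float:
    """Percentage reduction going from `before` bytes to `after` bytes."""
    return round((before - after) / before * 100, 1)

# Byte counts copied from the AjaxMin output above.
css_original, css_minified, css_gzipped = 35667, 26537, 5823
js_original, js_minified, js_gzipped = 83505, 64515, 34415

print(percent_saved(css_original, css_minified))  # 25.6
print(percent_saved(css_minified, css_gzipped))   # 78.1
print(percent_saved(js_original, js_minified))    # 22.7
print(percent_saved(js_minified, js_gzipped))     # 46.7

# The two steps stack, so the end-to-end saving (original file to gzipped
# output) is larger than either number alone.
print(percent_saved(css_original, css_gzipped))
print(percent_saved(js_original, js_gzipped))
```

Note that the Gzip percentage is measured against the already-minified file, not the original, so the two numbers on each pair of lines don't simply add up.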

Optimize your Images (particularly PNGs)

Looks like my 1600k cold (machine not cached) home page is mostly images, about 1300k. That's because I put a lot of articles on the home page but I also use PNGs for images in most of my blog posts. I could be more thoughtful and:

- Use JPEGs for photos of people, things that are visually "busy"

- Use PNGs for charts, screenshots, things that must be "crystal clear"

I can also optimize the size of my PNGs (did you know you can do that?) before I upload them with PNGOut. For bloggers and for ease I recommend PNGGauntlet, which is a Windows app that calls PNGOut for you. Easier than PowerShell, although I do that also.

If you use Visual Studio 2010, you can use Mads' Beta Image Optimizer Extension that will let you optimize images directly from Visual Studio.

To show you how useful this is, I downloaded the images from the last month or so of posts on this blog totaling 7.29MB and then ran them through PNGOut via PNGGauntlet.

Then I took a few of the PNGs that were too large and saved them as JPGs. All in all, I saved 1359k (that's almost a meg and a half or almost 20%) for minimal extra work.

If you think this kind of optimization is a bad idea, or boring or a waste of time, think about the multipliers. You're saving (or I am) a meg and a half of image downloads, thousands of times. When you're dead and gone your blog will still be saving bytes for your readers! ;)

This is important not just because saving bandwidth is nice, but because perception of speed is important. Give the browser less work to do, especially if, like me, almost 10% of your users are mobile. Don't make these little phones work harder than they need to and remember that not everyone has an unlimited data plan.

Let your browser cache everything, forever

Mads reminded me about this great tip for IIS7 that tells the webserver to set the "Expires" header to a far future date, effectively telling the browser to cache things forever. What's nice about this is that if you or your host is using IIS7, you can change this setting yourself from web.config and don't need to touch IIS settings.

<staticContent>

<clientCache httpExpires="Sun, 29 Mar 2020 00:00:00 GMT" cacheControlMode="UseExpires" />

</staticContent>

You might think this is insane. This is, in fact, insane. Insane like a fox. I built the website so I want control. I version my CSS and JS files in the filename. Others use QueryStrings with versions and some use hashes. The point is: are YOU in control, or are you just letting caching happen? Even if you don't use this tip, know how and why things are cached and how you can control it.
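For the "some use hashes" approach, the idea is that the filename changes whenever the content does, so a far-future Expires header can never serve a stale file. Here is a minimal Python sketch under that assumption. `fingerprinted_name`, the file name, and the sample contents are all hypothetical, and this is not how the versioned filenames on this blog are produced (those are bumped by hand).

```python
import hashlib
from pathlib import PurePosixPath

def fingerprinted_name(filename: str, content: bytes, length: int = 8) -> str:
    """Insert a short content hash before the extension of a bare file name."""
    digest = hashlib.sha256(content).hexdigest()[:length]
    path = PurePosixPath(filename)
    return f"{path.stem}.{digest}{path.suffix}"

# Hypothetical file contents before and after an edit.
css_v1 = b"body{line-height:1}"
css_v2 = b"body{line-height:1.2}"

print(fingerprinted_name("site.min.css", css_v1))
print(fingerprinted_name("site.min.css", css_v2))
```

The two printed names differ, so a browser that cached the first one "forever" will still fetch the second. Whichever scheme you pick (a manual version number, a query string, or a hash), the page has to reference the new name when the file changes.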

Compress everything

Make sure everything is GZip'ed as it goes out of your Web Server. This is also easy with IIS7 and allowed me to get rid of some old 3rd party libraries. All these settings are in system.webServer.

<urlCompression doDynamicCompression="true" doStaticCompression="true" dynamicCompressionBeforeCache="true"/>

If there is one thing you can do to your website, it's turning on HTTP compression. For average pages, like my 100k of HTML, it can turn into 20k. It downloads faster and the perception of speed by the user from "click to render" will increase.

Certainly this post just scratches the surface of REAL performance optimization and only goes up to the point where the bits hit the browser. You can go nuts trying to get an "A" grade in YSlow, optimizing for # of DOM objects, DNS Lookups, JavaScript ordering, and on and on.

That said, you can get 80% of the benefit for 5% of the effort with these tips. It'll take you no time and you'll reap the benefits hourly:

- Minifying your JS and CSS

- Combining CSS and JS into single files to minimize HTTP requests

- Turning on Gzip Compression for as much as you can

- Setting Expires headers on everything you can

- Compressing your PNGs and JPEGs (but definitely your PNGs)

- Using CSS Sprites if you have lots of tiny images

I still have a "D" on YSlow, but a D is a passing grade. ;) Enjoy, and leave your tips, and your "duh!" in the comments, Dear Reader.

Also, read anything by Steve Souders. He wrote YSlow.

Hosting By

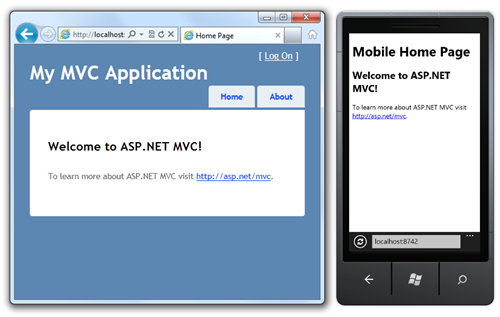

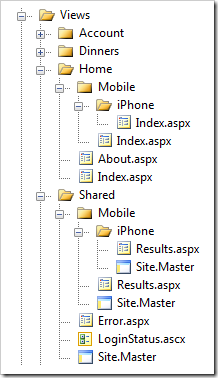
