Back to Basics: Big O notation issues with older .NET code and improving for loops with LINQ deferred execution

Earlier today Brad Wilson and I were discussing a G+ post by Jostein Kjønigsen where he says, "see if you can spot the O(n^2) bug in this code."

public IEnumerable<Process> GetProcessesForSession(string processName, int sessionId)

{

var processes = Process.GetProcessByName(processName);

var filtered = from p in processes

where p.SessionId == sessionId

select p;

return filtered;

}

This is a pretty straightforward method that calls a .NET BCL (Base Class Library) method and filters the result with LINQ. Of course, when any function calls another one that you can't see inside (which is basically always) you've lost control. We have no idea what's going on in GetProcessesByName.

Let's look at the source to the .NET Framework method in Reflector. Our method calls Process.GetProcessesByName(string).

public static Process[] GetProcessesByName(string processName)

{

return GetProcessesByName(processName, ".");

}

Looks like this one is an overload that passes "." into the next method Process.GetProcessesByName(string, string) where the second parameter is the machineName.

This next one gets all the processes for a machine (in our case, the local machine) then spins through them doing a compare on each one in order to build a result array to return up the chain.

public static Process[] GetProcessesByName(string processName, string machineName)

{

if (processName == null)

{

processName = string.Empty;

}

Process[] processes = GetProcesses(machineName);

ArrayList list = new ArrayList();

for (int i = 0; i < processes.Length; i++)

{

if (string.Equals(processName, processes[i].ProcessName, StringComparison.OrdinalIgnoreCase))

{

list.Add(processes[i]);

}

}

Process[] array = new Process[list.Count];

list.CopyTo(array, 0);

return array;

}

if we look inside GetProcesses(string), it's another loop. This is getting close to where .NET calls Win32 and as these classes are internal there's not much I can do to fix this function other than totally rewrite the internal implementation. However, I think I've illustrated that we've got at least two loops here, and more likely three or four.

public static Process[] GetProcesses(string machineName)

{

bool isRemoteMachine = ProcessManager.IsRemoteMachine(machineName);

ProcessInfo[] processInfos = ProcessManager.GetProcessInfos(machineName);

Process[] processArray = new Process[processInfos.Length];

for (int i = 0; i < processInfos.Length; i++)

{

ProcessInfo processInfo = processInfos[i];

processArray[i] = new Process(machineName, isRemoteMachine, processInfo.processId, processInfo);

}

return processArray;

}

This code is really typical of .NET circa 2002-2003 (not to mention Java, C++ and Pascal). Functions return arrays of stuff and other functions higher up filter and sort.

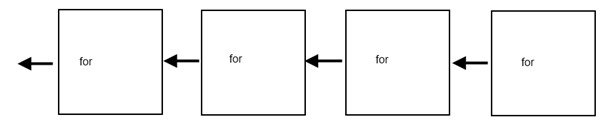

When using this .NET API and for looping over the results several times, I'm going for(), for(), for() in a chain, like O(4n) here.

Note: To be clear, it can be argued that O(4n) is just O(n), cause it is. Adding a number like I am isn't part of the O notation. I'm just saying we want to avoid O(cn) situations where c is a large enough number to affect perf.

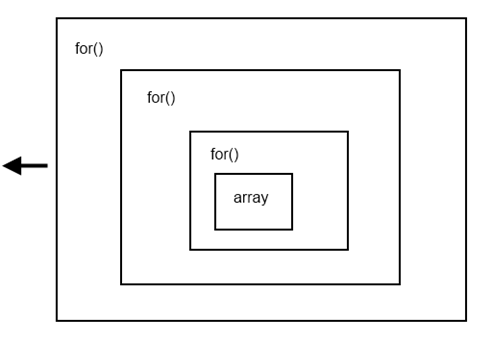

Sometimes you'll see nested for()s like this, so O(n^3) here where things get messy fast.

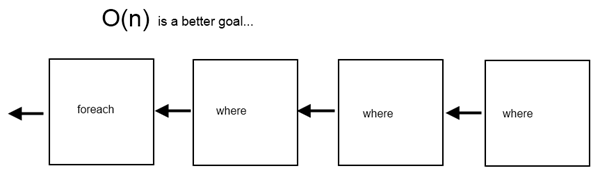

LINQ is more significant than people really realize, I think. When it first came out some folks said "is that all?" I think that's unfortunate. LINQ and the concept of "deferred execution" is just so powerful but I think a number of .NET programmers just haven't taken the time to get their heads around the concept.

Here's a simple example juxtaposing spinning through a list vs. using yield. The array version is doing all the work up front, while the yield version can calculate. Imagine a GetFibonacci() method. A yield version could calculate values "just in time" and yield them, while an array version would have to pre-calculate and pre-allocate.

public void Consumer()

{

foreach (int i in IntegersList()) {

Console.WriteLine(i.ToString());

}

foreach (int i in IntegersYield()) {

Console.WriteLine(i.ToString());

}

}

public IEnumerable<int> IntegersYield()

{

yield return 1;

yield return 2;

yield return 4;

yield return 8;

yield return 16;

yield return 16777216;

}

public IEnumerable<int> IntegersList()

{

return new int[] { 1, 2, 4, 8, 16, 16777216 };

}

Back to our GetProcess example. There's two issues at play here.

First, the underlying implementation where GetProcessesInfos eventually gets called is a bummer but it's that way because of how P/Invoke works and how the underlying Win32 API returns the data we need. It would certainly be nice if the underlying API was more granular. But that's less interesting to me than the larger meta-issue of a having (or in this case, not having) a LINQ-friendly API.

The second and more interesting issue (in my option) is the idea that the 2002-era .NET Base Class Library isn't really setup for LINQ-friendliness. None of the APIs return LINQ-friendly stuff or IEnumerable<anything> so that when you change together filters and filters of filters of arrays you end up with O(cn) issues as opposed to nice deferred LINQ chains.

When you find yourself returning arrays of arrays of arrays of other stuff while looping and filtering and sorting, you'll want to be aware of what's going on and consider that you might be looping inefficiently and it might be time for LINQ and deferred execution.

Here's a simple conversion attempt to change the first implementation from this classic "Array/List" style:

ArrayList list = new ArrayList();

for (int i = 0; i < processes.Length; i++)

{

if (string.Equals(processName, processes[i].ProcessName, StringComparison.OrdinalIgnoreCase))

{

list.Add(processes[i]);

}

}

Process[] array = new Process[list.Count];

list.CopyTo(array, 0);

return array;

To this more LINQy way. Note that returning from a LINQ query defers execution as LINQ is chainable. We want to assemble a chain of sorting and filtering operations and execute them ONCE rather than for()ing over many lists many times.

if (processName == null) { processName = string.Empty; }

Process[] processes = Process.GetProcesses(machineName); //stop here...can't go farther?

return from p in processes

where String.Equals(p.ProcessName, processName, StringComparison.OrdinalIgnoreCase)

select p; //the value of the LINQ expression being returned is an IEnumerable<Process> object that uses "yield return" under the hood

Here's the whole thing in a sample program.

static void Main(string[] args)

{

var myList = GetProcessesForSession("chrome.exe", 1);

}

public static IEnumerable<Process> GetProcessesForSession(string processName, int sessionID)

{

//var processes = Process.GetProcessesByName(processName);

var processes = HanselGetProcessesByName(processName); //my LINQy implementation

var filtered = from p in processes

where p.SessionId == sessionID

select p;

return filtered;

}

private static IEnumerable<Process> HanselGetProcessesByName(string processName)

{

return HanselGetProcessesByName(processName, ".");

}

private static IEnumerable<Process> HanselGetProcessesByName(string processName, string machineName)

{

if (processName == null)

{

processName = string.Empty;

}

Process[] processes = Process.GetProcesses(machineName); //can't refactor farther because of internals.

//"the value of the LINQ expression being returned is an IEnumerable<Process> object that uses "yield return" under the hood" (thanks Mehrdad!)

return from p in processes where String.Equals(p.ProcessName == processName, StringComparison.OrdinalIgnoreCase) select p;

/* the stuff above replaces the stuff below */

//ArrayList list = new ArrayList();

//for (int i = 0; i < processes.Length; i++)

//{

// if (string.Equals(processName, processes[i].ProcessName, StringComparison.OrdinalIgnoreCase))

// {

// list.Add(processes[i]);

// }

//}

//Process[] array = new Process[list.Count];

//list.CopyTo(array, 0);

//return array;

}

This is a really interesting topic to me and I'm interested in your opinion as well, Dear Reader. As parts of the .NET Framework are being extended to include support for asynchronous operations, I'm wondering if there are other places in the BCL that should be updated to be more LINQ friendly. Or, perhaps it's not an issue at all.

Your thoughts?

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

About Newsletter

The other more severe thing that I hope I'm wrong about is the way you say O(3n). These nested for loops seems to have a O(n^3) which is totally different from O(3n) = O(n). What you're showing implies a O(n^3) to me, so if you don't mind, please clarify that.

Regardless of the algorithmic stuff, the post is interesting.

However, my biggest takeaway from this was that I'm a loser for still not being in Google+.

Double posting to agree with this. The main thrust of O notation is that linear multipliers are ignored. But w/ever.

3(n*1) == 1(n*3)

There is a slight win with memory due to not having to alloc intermediate arrays for each function, but I doubt it'd make much difference.

The only big difference I see is if you put a .First() on the end. The linq way would avoid evaluating the rest of the items in the list where the nested loops wouldn't.

Don't get me wrong, I much prefer the linq version, but I just don't agree with the Big O argument.

As such, perhaps this is going to sound like a lot of nitpick, but there's a LOT of it. Maybe my college days are further behind than even I would want to admit, but:

:: Isn't the whole point to O(n) that it is actually O(cn) for some constant c ~ where c can get stupid big in a lot of ways? In other words: O(10n) ~ O(3n) ~ O(n), and all better than O(nlogn), O(n^2), etc... because Big O notation is worried about BIG n.

:: The reference to O(n2) [n-squared] is spurious and misleading; or possibly I missed something?

:: The box in a box diagram is, in my mind, more evocative of O(n^3) [n-cubed] than O(n) for c=3. The code you show isn't forloop-inside-a-forloop, it's forloop-then-another-forloop

Apologies, but I found the treatment to be very distracting from the otherwise good points.

In practical coding and analysis, though, the difference between N and 3N iterations can be huge!

1) line 30 reads "return from p in processes where String.Equals(p.ProcessName == processName, StringComparison.OrdinalIgnoreCase) select p; "

it should be

"return from p in processes where String.Equals(p.ProcessName, processName, StringComparison.OrdinalIgnoreCase) select p; "

2) I tried running the LINQ version against the non-LINQ version and it came out slower. the below code uses Environment.TickCount for simplicity but when I use a high resolution timer I get similar results:

"static void Main(string[] args)

{

int start = Environment.TickCount;

for (int i = 0; i < 100; i++)

{

var myList = GetProcessesForSessionLinq("firefox", 1);

}

int stop = Environment.TickCount;

Console.Out.WriteLine("Using LINQ: {0:f2}ms", (stop-start)/100);

start = Environment.TickCount;

for (int i = 0; i < 100; i++)

{

var myList = GetProcessesForSession("firefox", 1);

}

stop = Environment.TickCount;

Console.Out.WriteLine("Using Old: {0:f2}ms", (stop - start) / 100);

}

public static IEnumerable<Process> GetProcessesForSessionLinq(string processName, int sessionID)

{

//var processes = Process.GetProcessesByName(processName);

var processes = HanselGetProcessesByName(processName); //my LINQy implementation

var filtered = from p in processes

where p.SessionId == sessionID

select p;

return filtered;

}

public static IEnumerable<Process> GetProcessesForSession(string processName, int sessionID)

{

var processes = Process.GetProcessesByName(processName);

//var processes = HanselGetProcessesByName(processName); //my LINQy implementation

var filtered = from p in processes

where p.SessionId == sessionID

select p;

return filtered;

}"

For my machine, LINQ was 6ms, Non-LINQ was 5ms.

To be clear, it can be argued that O(4n) is just O(n), cause it is. Adding a number like I am isn't part of the O notation. I'm just saying we want to avoid O(xn) situations where x is a large enough number to affect perf.

for (int i = 0; i < 10; i++) { for (int j = 0; j < 10; j++) { do(); } } will run 100 times (10*10) or n^2

Battaile - Agreed. I'm concerned about big values of C that affect the interations. I realize I'm abusing Big O notation.

Danny - Thanks. I am not a math guy, I'm a coder. I'm trying to say what you said well. Practically, math or not, loops add up.

Matthew - That's good to know...too small to measure, though, eh? I wonder what it looks like with files for example, or database operations, etc.

Alan - Correct. I've fixed the diagrams, I was missing one.

I think the BCL could benefit from the great improvements since version 2 (or 3, 3.5, 4, 4.5) of the framework.

There are lots of ugly object-returning functions that could be returning nice generics.

There are lots of array-returning functions that could benefit from LINQ.

There are lots of callback-passing functions that could beneffit from Tasks.

It would be really nice to be able to only use the good stuff, and hide/deprecate the old stuff. I know you must have in mind compatibility issues, so this improvements are unlikely to happen.

Note to self. Refresh comments (and POST!) before posting own.

Better, but the diagram immediately below "Sometimes you'll see nested for()s like this, so O(n3) here where things get messy fast." is still wrong - it shouldn't say "O(3n) or more!", it should say "O(n^3)!" with clarification that the diagram is a could-be, not representative of this example.

The streaming of results using LINQ's deferred execution would drastically reduce the amount of copies required here, if you could push it all the way through the chain of methods.

Abstractly, the original execution, when flattened out, looks like:

ProcessInfo[] a = GetProcessInfos()

Process[] b = Loop_MakeProcessInfosIntoProcesses(a)

Process[] c = Loop_FilterProcessesByName(b)

IEnumerable<Process> d = Loop_FilterProcessesBySession(c)

I see three loop _executions_ but they're not nested, are they?

A LINQ version based on "yield" would essentially eliminate some allocations (by avoiding the arrays) and as a side-effect would benefit from loop unwinding (see http://en.wikipedia.org/wiki/Loop_unwinding). However it's not clear to me that we would see any performance gain because 1) .NET is extremely efficient at allocating/deallocating (consider ASP.NET's control hierarchy for a typical page, for example) and 2) any gain from loop unwinding would be marginal given such a short list of items.

Yes, maybe it could be optimized somewhat but the first rule of performance optimization is to concentrate your efforts on the biggest bottlenecks, and I just don't see one here.

The example used in this post is not a good reflection of the main point that Scott is making. There is definitely a performance gain for some reasons (including what Reed Copsey, Jr. mentioned) but they can't be visible with small numbers of items like the processes on a machine. If you test this approach with better examples (especially with objects that need realistic allocations in memory), you would get the clear results.

As of the big-O notation, it's not generally a good idea to use such asymptotic notations for programming APIs unless it's in a very abstract level because these methods usually have many dependencies that have different costs and make it hard to examine them. Instead, it's a good idea to use StopWatch or performance counters to evaluate the differences.

I've been looking for a reference to know if .NET performs loop unrolling. Do you have any reference for that? I searched but couldn't find a good one.

Appreciate your help :-)

The idea that just using LINQ will somehow make slow code faster is a pretty wild mischaracterization of LINQ. The reality is that in almost all cases, a LINQ implementation will be slower and require more memory than an equivalent non-LINQ implementation.

What you're really proposing is chaining selectors, which can be accomplished using a million techniques, deferred execution via query expressions being one.

The big win with LINQ is not performance and it's not memory. The big win with LINQ is syntax and consistency. Returning IEnumerable<T> is often beneficial regardless of whether the caller will use LINQ to consume the result.

Unfortunately, my experience suggests that abuse of LINQ is rampant in the industry today and I'm afraid that posts like this do more harm than good. LINQ is a very powerful tool, and IMO, it should only be wielded by those who have taken the time to understand it well.

Focus on the real performance wins here:

1) Eliminating multiple (especially nested) loops over the same data is a useful endeavor and is often quite easy.

2) Yielded execution of an expensive enumeration can help when it is possible to terminate early.

I don't see any LINQ-specific perf gains in this example.

Hopefully that helps focus the discussion in a productive way.

Here is an MSDN article that briefly mentions loop unrolling in the JIT compiler.

http://msdn.microsoft.com/en-us/library/ms973838

Great post as always, but let me throw a different perspective into the pot.

I write the core libraries for .NET used by my company and it's 40-50 devs. They're great devs, I like to think they're above the curve. Despite that I love C# because of it's type safety, so many potential bugs are removed at compile time.

.NET 1 removed the biggest source of bugs - memory management.

.NET 2 gave us generics which strengthened type usage. At this point you.could give code to a junior dev and know he'd have to work hard to mess it up.

Now I'm a performance guy, it's my main role - and like you, after I reduce the power it's all about the constant. But the truth is we're lucky if we matter 2% of the time. With the speed of hardware these days and the cost of RAM, performance and memory management play second fiddle to stability and maintainability - much as it pains me to admit.

I love LINQ, I love deferred execution. My libraries are littered with IEnunerable<T>! I prefer keeping my SQL in a DB, and staying fluent, and wish the language spec. hadn't been butchered but I'll live.

Over time though my core libraries have also become littered with something else - .ToList().

The reason is simple, when you're not the consumer of your enumeration, deferred execution bites you in the maintenance butt. The problem is people expect a method to give back a snapshot. It gets worse when the underlying source is constantly changing, the less experienced quickly introduce bugs that only occur under load as the runtime complains about enumerating a modified collection. I've seen that particular bug in production code too often. (The TPL concurrent classes have helped - by effectively snapshotting for you).

So should we update the BCL? Absolutely, but let's start with fixing those classes that use object everywhere that go back to before generics.

Then lets expose more asynchronous methods (you know the ones we want). After years of Wintellect I cheered for the Async CTP! (Fingers crossed you deliver on a well thought out Exception mechanism)

After that we can go back to adding enumerables but maybe we suffix them all 'Deferred' or something - after all some devs never learn.

Here's a +1 for maintainability and a -1 for more magic under the hood. (We can always go back to playing with Expressions if we're bored).

I agree that using Linq careless may cause lots of problems.

Debuggin LINQ anyone.. ?

I would like to +1 everything @Craig said. Unfortunately, I fear magic under the hood sells more copies of Visual Studio than a more maintenance-friendly BCL would.

*Unanticipated by the developers using them. For the most part the "side-effects" are well documented and easily understood by anyone who takes the time to go deep.

We need to separate O(n^2) from O(2n) from the actual performance impact. O(2n) is O(n), because constants aren't ever part of big-O notation. So in the strictest sense, this reuse of arrays and loops is O(n). In practical terms, though, the constant does matter, because it does have an impact on performance (and memory consumption!), so using deferred execution eliminates the constant (or at least reduces it, until you run into internal APIs that you can't/don't want to re-write, as in Scott's example blog post).

This has a strong parallel with the Build conference this week: everything in WinRT which can run a long time is now async only. The Windows (and Visual Studio) team realized that the problems with writing code which is and/or consumes async things were basically tooling problems. C# 5 and VB 5 added await and async, and we all win. Deferred execution is the same thing: linear code gets churned and turned into a finite state machine that implements IEnumerable; while it was possible to hand-craft enumerator implementations which did deferred execution in the past, it was really LINQ and .NET 3.5's addition of "yield return" that made it doable by mortals.

It's purely academic to argue that O(1n) == O(3n) (or O(10000n)). It's a fine thing to be concerned with in an academic sense, but in the real world we have performance and memory allocation issues to consider.

The Fibonacci example was one I gave to Scott. What if you wanted to print out the first 10 #s in the Fib sequence? Obviously, we could calculate them all and return them in a 10-element array, and that's not an unreasonable implementation. However, it's also less useful, because either the algorithm is hard-coded to return you 10 results, or it requires you to pass an upper limit (since Fib is an infinite series; ignore the limits of int for a moment).

Using deferred execution, you can return an IEnumerable<int> of the Fib series and not be concerned with when to stop. The end user can either stop the enumeration by hand when they have enough numbers, or use a limiter like LINQ's "Take()". Think about all the ways you might want to slice your query of the Fib series ("Skip the first 20 entries, then give me every result which is less than 1000") and you can see that the LINQ version is clearly much more flexible than trying to design a GetFib API with complex querying support.

The secondary issue is one of memory allocation. What if you wanted 1,000 Fib numbers, but you just planned to print them out? In the non-deferred execution version, you must wait for the algorithm to calculate them all, plus allocate a 1,000 item array to return them all to you. In the deferred execution version, you're only ever returning a single value at a time, which significantly reduces your memory footprint.

Third, the non-deferred execution version doesn't allow shortcutting. There are several LINQ methods which shortcut automatically (First, FirstOrDefault, Single, SingleOrDefault, Any, etc.), and it's trivial to write others (like a custom version of Where which knows that your numbers are always increasing, so it can short-circuit when you've already exceeded your maximum).

Finally, there's the issue of the actual time spent doing the loops. Whether your primary loop goal is O(n) or O(n log n) or O(n^2), we can all agree that running the loop multiple times will take longer than running it once. Yes, perhaps this example wasn't sufficient to highlight the point, but the point still remains. Regardless of the general efficiency of your algorithm, the constant -- which academically uninteresting -- may actually manifest in a real, measurable performance problem. Knowing about deferred execution means you can help eliminate these inefficiencies when you discover them. Having the BCL (or your own home-rolled APIs) use deferred execution allows the callers to make the important performance decisions, and means you don't incur unnecessary memory issues on them, either.

The reason is simple, when you're not the consumer of your enumeration, deferred execution bites you in the maintenance butt. The problem is people expect a method to give back a snapshot. It gets worse when the underlying source is constantly changing, the less experienced quickly introduce bugs that only occur under load as the runtime complains about enumerating a modified collection. I've seen that particular bug in production code too often. (The TPL concurrent classes have helped - by effectively snapshotting for you).

I believe the contract for IQueryable<T> implies "live calculation" a bit stronger than IEnumerable<T>, so perhaps Scott's example should've been returning IQueryable<T> instead (even though it makes no technical difference to the points he makes). What have you trained your developers to think about the differences between IQueryable<T> and IEnumerable<T>?

Note that deferred execution is not new with .NET 3.5 and LINQ. It just became MUCH easier to write. You were always free to implement your IEnumerable<T> in a deferred way (and in fact, the contract of IEnumerable<T> is by definition deferred... it's just that pre-allocated data structures like arrays are themselves not).

What have you trained your developers to think about the differences between IQueryable<T> and IEnumerable<T>?

Real world experience speaking here... I respect your views tremendously, but that sentence demonstrates lack of awareness of how much training most developers get or seek around the tools they are using. Particularly in the face of Scott's recent comments that we all should be polyglot programmers, it only becomes less likely that the average developer will understand the idiomatic nuances of a particular language or framework.

If I could train all the developers around me, it would probably be that IEnumerable<T> := Use Caution while IQueryable<T> := Dangerous Curves. Not because they are bad conventions, but because they have serious implications that need to be understood.

I know this isn't what we're supposed to say. We're supposed to say that programmers are smart and awesome and spend 100 hours a week building sweet open source distributed key-value stores in addition to the 80 hours a week spent building clean, efficient, enterprise SaaS solutions - but that isn't the reality I live in and I'm not certain it's a reasonable expectation of the industry.

For those of us who are committed to the .NET platform, who love C#, and who want to dig deep to get the nuances, please keep building great features. Just understand that when those features come with a lot of conditions, it's a small subset of the developer community that are able to use them without doing themselves harm.

As you say yourself BOTH IEnumerable & IQueryable are by definition deferred. Their difference is down to the additional query support.

My argument was about 'average' developers (without being disparaging). To them IQueryable is more about LINQ to SOL vs LINQ to Object/XML than any distinction around deferral. They are unlikely to have encountered Expressions.

Even experienced developers get caught out by deferral, just like memory management it is a rich source of subtle bugs.

To be clear, my devs pass over 50+ Microsoft Certified exams a year. On average a new dev will pass 2 in their first year. We sit so many I've just opened a new training and examination centre, so it's not about training.

Enumerables are powerful but they are difficult to debug and difficult to validate at compile time. I'm not saying don't use them, I'm saying understand the end user.

And as a rule of thumb - if you expose an IEnumerable outside an assembly AND that enumeration is mutable then you should probably snapshot it. That rule of thumb suggests that the BCL isn't necessarily a candidate for upgrading.

We banned LINQ to SQL on the grounds that it breaks encapsulation and produces sub-optimal queries when compared to writing sprocs. Sprocs are far more maintainable especially when you have performance issues on a major clients production servers at 2AM Christmas Day.

It's a bloodbath in the trenches!

Jostein claims that the bug is due to the .SessionId property itself iterating over all the processes on the machine.

Process.SessionId calls Process.EnsureState, which in theory executes the following code:

if (((state & State.HaveProcessInfo) != ((State) 0))

&& (this.processInfo == null))

{

...

ProcessInfo[] processInfos = ProcessManager.GetProcessInfos(this.machineName);

for (int i = 0; i < processInfos.Length; i++)

{

...

However, since Process.GetProcessesByName() initializes each of the returned Process objects by passing in the relevant ProcessInfo, which would cause the nested loop above NOT to be called.

In fact, I just stepped through Process.EnsureState (v4.0) and indeed it DID NOT execute the nested loop, so I don't think Jostein is telling the whole story...

I totally disagree with your argument about setting O(xn) to O(n). It's not about academic and real world. It's about the truth of mathematics. You're using something that is coming from academia (if you call it that way) so you need to follow the same rules and approaches followed which is pure mathematical stuff.

And it makes a lot of difference indeed.

1) iterating over an array 3 times is NOT O(n^3). it's O(n).

2) filtering an array into intermediate temporaries is NO MORE CPU-intensive than nesting multiple .Where clauses. The only difference here is the allocation of the temporary arrays vs. the allocation of the iterators/lambdas.

consider the two methods in the following code:

static void Main (string [] args)

{

var items = Enumerable.Range (0, 100000000);

var predicates = new Predicate<int> []

{

i => (i % 5) == 0,

i => (i % 3) == 0,

i => (i % 2) == 0,

};

Stopwatch sw = new Stopwatch ();

sw.Start ();

var results1 = new List<int> ();

foreach (var item in items)

{

var fMatch = false;

foreach (var predicate in predicates)

{

if (!predicate (item))

{

fMatch = false;

break;

}

}

if (fMatch)

results1.Add (item);

}

sw.Stop ();

Console.WriteLine (sw.ElapsedMilliseconds);

sw.Restart ();

foreach (var predicate in predicates)

{

var tmp = new List<int> ();

foreach (var item in items)

{

if (predicate (item))

tmp.Add (item);

}

items = tmp;

}

sw.Stop ();

Console.WriteLine (sw.ElapsedMilliseconds);

}

the first method is essentially what the LINQ query is doing. and the second method is what the old-style temporary array method is doing.

granted, LINQ has distinct advantages:

- the API is cleaner, composable

- there's less temporary array allocation (at the expense of iterator allocation)

- arrays are bad (mutable, covariant)

but the performance is not significantly different

EVERYONE: I am not sure I said anything about performance in this post. I used terms like "inefficiently." This isn't a post that says "it'll be faster if you use LINQ."

This is a post that says "LINQ is a goodness and it's better if the underlying API is ready for it."

Although this 'lazy' computation model does have a positive effect on performance, I think the greater significance is the way it changes how you write code. It's hard to explain how this works in practice in a short space, but...things become a lot easier to compose when you don't have to put artificial limits on computation explicitly everywhere.

Although LINQ doesn't turn C# into Haskell, it does give it some of that character. I was working on an app the other day that had a treeview that was essentially an entity model explorer. Due to the nature of the model, Employees have Positions and Positions have Employees and that sort of thing, the tree had effectively infinite depth. Long story shorter, I data-bound the treeview to a LINQ query and everything just worked. I think this would have been much harder to setup without LINQ.

Surely given this assumption we can discuss the internal structure of the set of algorithms whose time complexity is O(n). The claims are both true and relevant to Scott's points. What more can you justifiably ask for from a non-mathematician?

Furthermore, we can prove that O(3n) is always slower than O(2n). This actually makes it a stronger comparison of the irrelevant inter-complexity comparisons that people are hoisting. So again, what more can you really ask for here?

My problem with using LINQ everywhere, is that it's not as smart as you think it is: "ints.Where(x => x % 2 == 0).Where(x => x < 100).ToList()" doesn't get turned into the nice loop:

var result = new List<int>();

foreach (var item in ints) {

if (x % 2 == 0 && item < 100) {

result.Add(item);

}

}

It is only:

// first .Where():

Func<int, bool> pred1 = x => x % 2 == 0;

var where1 = new WhereEnumerable();

where.source = ints;

where.preds.Add(pred1);

// second .Where():

var where2 = new WhereEnumerable()

Func<int, bool> pred2 = x => x < 100;

// Chained Where()s will not chain their WhereEnumerables,

// but they *will not* merge their predicates.

where2.source = where1.source;

where2.preds.AddRange(where1.preds);

where2.preds.Add(pred2);

// source.ToList() is simply new List(), foreach .Add()

// (assuming source is not an array or IList? or was it ICollection)

// However, foreach uses .MoveNext(), which for a WhereEnumerable is:

bool WhereEnumerator.MoveNext() {

while (source.MoveNext()) {

foreach (var pred in preds) {

if (!pred(source.Current)) goto next;

}

return true;

next:

}

return false;

}

// Therefore, .ToList(), with the IEnumerable culled, is *really*:

var result = new List();

while (source.MoveNext()) {

foreach (var pred in preds) {

if (!pred) goto next;

}

result.Add(source.Current);

next:

}

Do you see the problem now? With complex queries with lots of simple expressions, you are spending most of your time going into and out of *multiple* .MoveNext()s and invoking Func<T, bool>s. Due to cache coherency, it's normally *faster* to do multiple passes over flat arrays than all this method calling (especially given that GC basically makes allocations free).

TL;DR: LINQ is for maintainability and expressiveness, *not* performance.

That said, it always amuses me when developers claim a reduction in object allocations as one of LINQ's benefits. For the sheer number of heap allocations, I find the opposite is often true. Granted, if you consider the total number of bytes allocated then you probably come out on top. But there's no doubt about it: LINQ generates a lot of garbage.

I was curious whether Scott's solution actually resulted in fewer allocations, as some earlier commenters suggested. I wrote an alternate implementation based on the first version of 'GetProcessesForSession' at the very top of Scott's post (the one from Jostein's blog) and placed it side-by-side with Scott's version. I rewrote Scott's code such that it was easier to see where the allocations are taking place (while preserving the actual LINQ operators used). I also added code to actually iterate over the results of both implementations.

Now, I'm pretty tired, so I may have missed something, but I count a minimum of 8 heap allocations for the version based on Scott's code, and only 6 for the version which uses .NET's GetProcessesByName() implementation. This assumes you actually iterate over the results.

// Minimum heap allocations: 8

internal class HanselmanProgram

{

private static void Main()

{

var processes = GetProcessesForSession("chrome.exe", 1);

// 1: IEnumerator for 'processes'

foreach (var process in processes)

Console.WriteLine(process.ProcessName);

}

public static IEnumerable<Process> GetProcessesForSession(

string processName,

int sessionId)

{

// 1: array returned by Process.GetProcesses()

var processes = Process.GetProcesses(".")

// 3: IEnumerator for array, delegate, compiler-generated IEnumerable

.Where(p => processName == null || p.ProcessName == processName)

// 3: IEnumerator, delegate, compiler-generated IEnumerable

.Where(p => p.SessionId == sessionId);

return processes;

}

}

// Minimum heap allocations: 6

internal class AlternateProgram

{

private static void Main()

{

var processes = ("chrome.exe", 1);

// 1: IEnumerator for 'processes'

foreach (var process in processes)

Console.WriteLine(process.ProcessName);

}

public static Process[] GetProcessesByName(

string processName,

string machineName)

{

// 1: array returned by Process.GetProcesses()

Process[] processes = Process.GetProcesses(machineName);

// 2: 'list' and the array wrapped by 'list'

ArrayList list = new ArrayList();

for (int i = 0; i < processes.Length; i++)

{

if (processName == null || processName == processes[i].ProcessName)

list.Add(processes[i]);

}

// 1: 'results' array

Process[] results = new Process[list.Count];

list.CopyTo(results, 0);

return results;

}

// 1: Compiler-generated IEnumerable (result of rewriting the iterator method)

public static IEnumerable<Process> GetProcessesForSession(

string processName,

int sessionId)

{

var processes = GetProcessesByName(processName, ".");

for (var i = 0; i < processes.Length; i++)

{

var process = processes[i];

if (process.SessionId == sessionId)

yield return process;

}

}

}

Note that the minimum of 6 allocations for the non-LINQ implementation only holds as long as there are no more than 4 processes matching the name provided. If you cross that threshold, the ArrayList's internal array gets replaced with a larger array (with a length of 8). Assuming you have 16 or fewer processes matching the name provided, the non-LINQ implementation meets or beats the LINQ implementation with respect to total number of allocations.

Granted, the number of allocations occurring would not be a concern for the vast majority of code. It can, however, make a difference in very hot code paths.

Is using the words "Order N" or "Big O" speaking about rigorous math? I didn't think so, but it does give me pause for the future.

I appreciate the support, Chris!

Roughly, use big O if:

1. you don't care about constants, or

2a. you are comparing to algorithms with different O, say O(log n), O(n^k) or O(k^n), *and*

2b. you are talking about large values of n

otherwise just use "3n" (or whatver), "O(3n)" is never useful!

If I cared about the mathematical use of big O, I'd be saying you that since O specifically means worst case, you would likely care more about it's big theta, which bounds it's best case as well - effectively average case.

Sometimes there's too much focus on avoiding a "breaking change" sometimes in .Net... and after a few generations of this things seem to get forgotten altogether by MS.

LINQ can serve both styles well. Older style can serve only one.

Obviously BCL is a big part of being productive with .Net.

btw: I was crunching some numbers on your team sizes. 40K * 200 devlopers equals about 9 million a year investment (old Silverlight numbers from 2010. 40 K is a guess for an average wage)?

How many people would you allocate to a mop up like you suggested? I think it's a worthwhile idea. Don't do it by halves though.

btw: hearing hard numbers on team sizes gives us a sense of how commited MS is to various technologies. Perhaps that's senseitive info that you cant release very often.

If you get used to thinking this way, your source code is easier to read for its intent: you don't have to follow what the bits do and after a while get that click in the brain that tells you whether the comments just above the code are still current or not. ;-)

Also, just as with relational database queries, if the system gets "smarter" (gets more features, like PLINQ for parallel processing), you can often leave that query code largely intact while still easily take advantage of the new smarter features. Compare that to make a piece of "bit shoving code" suitable for multicore machines.

Having been "raised" on Gofer/Haskell at uni, I was ecstatic when it clicked in my head that LINQ "had nothing to do with SQL" per se, but was more like the natural evolution of the yield statement. It really was something that allowed me to program in C# like my mind was already working. It was similar to finding out that VB6 indeed had an Implements keyword that would enable me to easier implement a lot of the GoF patterns.

Please spend the time with functional programming until it "clicks" in your mind; this could take about 6 months of exposure seemingly without anything happening, just as the pure imperative to "true OO" click might have needed before. You'll never program the same way again...

But maybe I'm just biased here: followed courses from Eric Meijer at Utrecht University. Hi, Eric! ;-)

And for another cool trick LINQ does: it works on infinite sequences. Check out http://peshir.blogspot.com/search/label/IEnumerable for a cool and possibly practical example.

Arrays are a part of the language that computers speak. LINQ is not. Low level calls will return arrays and wrapping it does not make too much sense to me. If you need it do it, but I see no reason for having the framework doing it.

1. Returning to Scott's original discussion - there was the idea of returning IEnumerable from the BCL. There has been a lot of discussion about O(whatever), but another point is: obviously this is nice since you're able to do First() and so on. Or - is it? I'd argue it's important to consider what different types convey (albeit through suggestions) to the API consumer. Unfortunately I believe this "vocabulary" is somewhat limited. An array tells me: "this is a pice of memory allocated for you only - it's already there and it's purely yours". An IEnumerable<T> tells me "you're probably going to benefit from not looping over all data, you might be sharing this, it might be lazy" (constrast this with ICollection for instance). I'd be like "wow...super...I THINK this method is just returning results as they arrive from the underlying API, and not all in one - albeit synchronosly". What we actually DO want to convey as BCL writeres here though is: all processes are already fetched, there's nothing you can do about it. Thus you would NOT benefit from just looking att the first element. Thus, I believe IEnumerable<T> is misleading

2. Sure, Scott suggested that he thought more of inefficiency and less of performance. But both efficiency and performance needs to be considered with the garbage collector in mind. Closing your eyes to that fact just seems narrow minded to me. If we REALLY wanted to do things efficiently, we'd change the underlying API to return results as they arrive. If that's not a possibility, we can think of ineffecient memory allocations, but that may well be a fallacy. Memory allocations on gen 0 are extremely cheap. Allocating a few iterators (very small) and WhereEnumerables (small) is not going to be a problem. But arrays that are used for a really short time aren't either. Structures that are used longer ARE however. Hence, the seemingly inefficient idea of allocating new objects all the time aren't that inefficient (given that the arrays are relatively small). Note that this holds quite true even though we chain a lot of filters and thus create a lot of arrays, as they are available for garbage collection all the time.

I came across a situation whereby nested Linq aggregation queries on a chunky set of data were performing woefully. It dawned on me that this is because I had nested linq queries built upon other linq queries. The inner ones were being re-evaluated on every iteration, taking several seconds. By unwinding these and pre-evaluating the queries by converting them ToList(). I got N^x performance benefit to my aggregations (it dropped to milliseconds from seconds).

The other thing I find myself sometimes having to do is put the linq hammer down. If one follows the functional programming mandate that Linq should not mutate data in place, but only project it, you can end up jumping through a lot of hoops making that work for a problem, and whilst this may scale nicely if you have a number of cpus tending to infinity and a data set doing the same, its can be pretty slow compared to stepping back for a moment and just using a foreach loop to do the work.

Yes, we are aware of that problem. But as Scott already wrote in his blog post: there are literally thousands of APIs in the .NET Framework that were added over the last decade. Although our team tries very hard ensuring every API holds up to our standards (.NET Framework Design Guidelines) there are areas we simply got wrong or in which we made design trade-offs that were based on properties that no longer hold true. Using arrays in public APIs is a good example of the latter: creating strongly typed wrappers around all untyped collections in the pre-generic world was not something we wanted to do so we opted for using arrays in quite a few places. Another example of this is Reflection (Type.GetMembers and friends).

Unfortunately, we cannot easily change the existing APIs without breaking compatibility. Adding additional overloads that would expose IEnumerable<T> seems like a viable option but we need to consider the additional cost and confusion we generate by effectively duplicating the API surface. For some core areas we did this in the past, for example File.ReadLines but this is something we do not want to apply on a large scale.

The good news is that we generally apply the better design moving forward. You can clearly see this in the new .NET APIs that we expose to developers of metro style apps. In Krzysztof Cwalina's BUILD session you can find more details. For example: in the new reflection APIs we replaced the array based APIs by Linq’s deferred execution model.

Of course, none of this means that we do not want to hear about issues in our APIs – if there are areas which are painful to use, we would like to hear your feedback.

{

int x = 30;

int y = 12;

int j = 0;

var q = from a in data.Tables

select a;

count = q.Count();

for (int i = 0; i <= count; i++)

{

j = i + 1;

Button bt = new Button();

bt.Size = new Size(200, 74);

bt.Top = x;

bt.Left = y;

bt.Name = q.First().ID.ToString();

bt.Text = q.First().Name.ToString();

x += 173;

if (j % 6 == 0)

{

x = 30;

y += 84;

}

pnButton.Controls.Add(bt);

}

Comments are closed.

However, in this case, the problem is really an internal framework problem. Even without changing the API, this method call could remove one entire loop internal in the framework. That would be a good improvement, as well. I suspect an audit of many of the API calls that were around in 1.1 would turn up quite a few potential optimizations that could be made.