Stop, think, research, debug

I got this great letter from a listener/reader recently. They listened to a recent show on Debugging Windows and it helped them debug a problem at work, but not in a specific technical way. Instead, it changed how they thought about their approach to the topic.

By the way, I've had some amazing guests on lately. If you haven't subscribed, it's a good time to join us. Explore the archives and check our our diverse topics and voices and subscribe. Also, BTW there is a new This Developer's Life out so check that out also.

I've been doing Hanselminutes: Fresh Air for Developers for almost 500 episodes over darn-near 10 years. Getting emails like this is so meaningful, especially when I think about taking breaks or stopping. Sometimes a few shows will go by with no comments and I'll wonder if anyone listens, and then I hear from a Real Live Human who was helped by all this free content I put out and it keeps me going. So first, thanks to all of you for this, if you've ever emailed or donated to fight diabetes.

Here's what this particular listener said, with emphasis mine.

Scott,

After listening to your podcast with Mario Hewardt earlier this week on Windows Debugging, I had some of the things you were talking about running through my head. Though I've always come away from your podcasts feeling enriched and excited to tackle new and interesting problems, this was the first time that it had a direct impact on my work so soon after listening.I work at a big data company that does a lot of social network analysis. We use ElasticSearch in our stack, and we are consistently processing millions of documents using complicated, user generated queries. A release we put out late last week allowed for many, larger, even more complicated user queries, which in turn led to substantial slowdown of our product. Though the code only existed in our staging environment, we are on a deadline for release early this next week. As it became obvious that the application was spending a LOT of time in the code my team was responsible for, we were tasked with "fixing" it ASAP.

I took the first shift, and though my brain immediately started coming up with ways to improve our code, something about your podcast regarding "know the tools your tools are built on" was stuck in my head. Instead of jumping in and optimizing what I was already comfortable with, I spent an hour researching the internals of the ElasticSearch functionality we were relying on.

Not sure how familiar you are with ES, but it distinguishes between searches that simply return a set of documents that match a query, much the way that traditional SQL databases do, and searches that return how well documents match a query, for ranking purposes. As it turned out, we were inadvertently using one of the latter ones, meaning when we provided X giant queries in an OR block, even though it was an OR block, which we expected would short circuit as soon as it returned a TRUE condition, it processed all X queries to determine how well each document matched. My big O notation is a bit rusty, but suffice it to say, it was one of the bad ones.

Instead of a gigantic fire drill app optimization over a weekend, it turned out to be an hour of research followed by switching the word "bool" to the word "or". It's remarkable how the most efficient coding you can do is often stopping and thinking about the problem for awhile!

Anyway, thanks to both you and Mario for saving me and my team a bunch of time!

This was a great reminder to me as well. Research is hard. It's not as dynamic as interactive debugging but it can often save you many wasted hours. Truly successful debugging means doing whatever it takes to understand the problem domain and the code paths.

Do you have any tales of debugging where taking the time to really understand the problem domain saved you time? Or perhaps the opposite, where you just dove in and poked at some code until it worked? Don't be ashamed, I think we've all be on both sides.

Sound off in the comments!

Sponsor: Big thanks to Infragistics for sponsoring the feed this week. Responsive web design on any browser, any platform and any device with Infragistics jQuery/HTML5 Controls. Get super-charged performance with the world’s fastest HTML5 Grid - Download for free now!

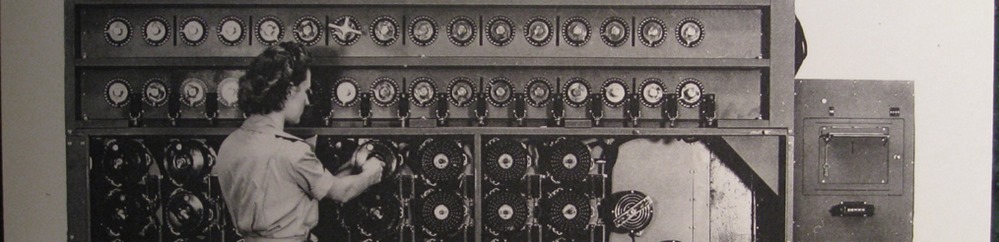

* Photo - US Navy Cryptanalytic Bombe by brewbooks, used under CC

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.

About Newsletter

Sometimes trying to do research into the base product and learning about it, I will come across someone with the same issue I had -- solution.

Mind you, I'm not complaining about having the answer at my fingertips, but going back to a question you posed once upon a time -- am I a programmer -- or just a good Googler?

I'm surprised the error list that shows after a compile aren't just hotlinks to Google search.

Much thanks for This Developer's Life and Hanselminutes, you have one of the few "developers are human" podcasts available.

@Michael Rumpler - awesome debug ;-)

Do you have any tales of debugging where taking the time to really understand the problem domain saved you time?

When it comes to memory dumps you are forced to do this - you can't poke at code and do quick tests because you no longer have a live process (or even the environment the process was executing on). You have to learn the minutiae of any frameworks/runtimes you are working against.

Next time you have to debug something see if you can manage it without a live process. You'll multiply your knowledge in the process: even knowledge about your own code.

Debugging - vNext, Mono, xUnit ... On Linux - Part 1

Debugging - vNext, Mono, xUnit ... On Linux - Part 2

The inserts which use the blockquote tag are losing the end of the line, this includes the section containing your listeners reply in the "Stop, think, research, debug" article.

Works fine in Firefox.

We finally asked for help. We were put in touch with the person that led the WPF performance team in Redmond. We emailed him memory dumps. We went over all of his known issues on different hardware/OS combinations. Finally, one of our engineers decided to just try one of the solutions having to do with the render thread and the *first* Window object instantiated in the app (subsequent Window objects would not have this problem), even though we had ruled this out as a root cause, because it was only supposed to happen on WinXP-SP3, and we had recreated it on Vista... Turns out there was an error in the blog post. It *did* happen in Vista. In fact, the memory leak occurred no matter *what* was being rendered, even a blinking cursor.

One line of code to instantiate a new Window(), and do absolutely nothing with it, plugged the leak. We made graphs of memory allocated over time, when the app was sitting idle, both without the fix and with the fix. One of them showed a stair-step pattern. One of them showed a flat line. We emailed this to the CTO who had voiced concerns, the launch went forward, and now it is running successfully in hundreds of hospitals around the world.

How many times have we started a problem investigation too far down in the weeds ("I could make sure my service is running but maybe I'll just turn on kernel pool tagging first").

This bit of wisdom is what binds us to every investigative profession from auto mechanic to brain surgeon.

Together with .net rocks, it's my main technical podcast. It strikes the balance between information and inspiration and gives me a window to the things besides the ones I use in my day to day job.

One of the reasons comments are so scarce is that they are consumed on times where commenting is just impractical (biking, running, workout, general multitasking).

Eventually I narrowed it down to a frequency around 60% of the full frequency range. Captions will work fine if you stop scanning before that frequency; if you scan beyond that frequency, captions won't work. Curiouser and curiouser.

Further debugging showed that the caption_enabled_flag stored in a global static structure printed different values (true and false) before and after this frequency. Yeah, memory corruption.

Couple of hours of objdumps and valgrinds later, we found a static array in the scanning module that stored the progress information for each frequency during a full scan. This array was used as a uint32_t pointer instead of uint8_t pointer in one of the functions, causing overflow. At the said frequency, this overflow starts spilling into next elements in the memory layout. One of the things it did was to set our caption_enabled_flag to false.

We made static analyzer mandatory after this.

When a problem arises, folks rushing to do *some*thing, rather than thinking a moment and doing the *right* thing.

I think it's because they don't want to admit they 'don't-just-know' the solution.

I 'just-know' very little (my brain's been full since the early 90s,) but I know I can find a solution with a wee bit of thought and research.

Very early on in my career I worked for an European company who had a software written in a scripting language and to compile the code you need a specialized editor, which would need a hardware dongle (as you would expect, vendor made money on the dongle). So the employer, never gave us the dongles, which meant we read the code and make changes and send them 5 thousands miles away to the HQ, to compile. What this tought me was

1. Write less code!

2. Spend time staring at screens and taking walkd before touching the keyboard

3. Run the code in my head.

I'm no gifted developer but whatever success I have found is because of these things I learnt early on.

So when Marketting come running complaining everything is slow on the website, And Ops + devs say, ramp up the hardware on SQL box. I can, after little poking around, point out not to encrypt table column, on which we are running LIKE queries (well, don't encrypt columns period) Or other times, find missing caching headers on expensive assets, which alone helps a lot.

Thanks for your podcasts and blog posts over the years. Appreciate it.

The interesting thing was that this happened only on specific Windows XP and Windows 7 machines with an OS hotfix which did some changes to GDI. Windows 8/8.1 did have this issue right from the start.

I finally found that it had something to do with GDI Bitmaps which were allocating 192kb of address space even for 48x48 600 byte Icons. If you have a few thousands of bitmaps around your memory soon becomes severly fragmented. This was related to a change in GDI where the Windows Imaging Component (WIC) was used to decode bitmaps after applying a specific hotfix.

Ok file mapping objects are the root cause so lets track with Windbg the aquire and release calls to find where not released Bitmaps are hiding. After setting the breakpoints in Windbg and adding some logging of the callstacks our application would become very slow and run into timeout errors because of ist new found slowness.

This failure caused me to learn new things like using a hooking library (EasyHook) to intercept some OS calls and log the method parameters and finally write the data out as ETW event. The best thing about ETW is that you can tell the OS to collect the call stacks for you. That approach proved to be ultra fast and resulted in very small ETL files even after running the application for hours. Then it was easy to parse the ETL file and filter out all unbalanced mapping calls which did relate to call stacks in our code.

This enabled us to predict which code sections would benefit most of optimizations (e.g. bitmap reuse, static Bitmaps, ...) so we could do isolated low impact changes even late in the release cycle.

My colleagues did not believe me that I could predict with these exact measurements the outcome of future changes. They were really stunned to find that my predictions turned out to be correct with an error <3%.

That was one of my best experiences that scientific troubleshooting can make a huge difference.

Did I forget something? Yes! This was my first bug report that forced MS to release Windows hot fixes ranging from XP-Windows 8.1. It turned out that the problematic hotfix was a backport from Windows 8 where the file mapping objects were allocated for no reason.

I found this post talking about promises and I bookmarked it. I went back later and read the post (slowly so that I understood it). After that, I was able to make one change the the code and run, and it worked the first time.

Making the code run correctly the first time felt good. I'm still feeling good.

We didn't have a DBA, so I finally got fed up one day and decided to figure out the root cause. I bought a book SQL Index design and spent a couple of evenings having my eyes opened to how SQL makes things fast. I also learned how to measure SQL performance using SQL Management Studio and get suggestions from the built-in query/index tuner. (I'd be willing to bet not many programmers even know that feature exists.) It turns out the few indexes we had were terrible or incorrect, and we were also missing a few. After updating the indexes and creating nightly jobs to rebuild the indexes and stats (if needed), the queries went from 30 seconds to less than a second. All because I researched the right way to index tables.

However the `or` filter your listener switched to is a special wrapper filter for when you are using filters that can not utilize (cached) bitsets, these are the script, numeric_range and geo related filters. These need to operate on all the documents anyway and this or filter wrapper allows you shortcircuit these more efficiently then the bool query. However other types of filters typically need to be placed inside a bool filter.

This blog post explains this in-deptly

With Elasticsearch 2.0 the story around filters and queries is simplified greatly. We no longer differentiate between filters an query constructs in the DSL. Both of them have been merged to queries. In addition to this the or/and/not filters have been deprecated and you are safe to use the bool query in all contexts.

This blog post outlines all the improvements to the query execution in 2.0

its good to reiterate though that an elasticsearch search has two phases

1. query, does scoring, aggregations etcetera.

2. post_filter, further filters down the documents after the query phase. This is handy if you want your aggregations to be unaffected by e.g filters a user chose to filter down the documents.

However you can still use filters inside the query phase, be sure to wrap them in a filtered query if you are using Elasticsearch 1.x. Or in the filter clause of the bool query if you are using Elasticsearch 2.0. This is often a better choice then executing the filters inside the post_filter phase depending on your use-case.

I've been working on a large-scale refactoring of our crusty old codebase (it's in Struts 1, if that gives you an idea), and after getting all code and JSPs deployed, there was still something with an invalid reference that wasn't switched. I was able to determine that it was a combination of an in-house CMS which hadn't rebuilt some of our pages from the database components they're made of, and the fact that Tomcat wasn't recompiling JSPs automatically into our Catalina work directory. Deleting the work files and re-running our CMS page generator made everything hunky-dory.

I remembered your advice while I was doing this, and marveled at the fact that a few months ago, I wouldn't have thought to check these things and would have gotten caught up for at least a day.

Comments are closed.

They rebooted their database servers every Monday night. When the database was slow on Tuesday, then they had to reboot them again to make everything running fine again.

After looking at tons of logs and analyzing execution plans it turned out that there was one employee who did not complain. The queries which this employee started every morning were very complicated and looked different from everybody elses. And this employee came to work by bicycle - except when it was raining. Then he came by car and was in the office a bit earlier.

An Oracle database optimizes its execution plans based on the first queries it sees after a reboot. When that employee came to the office by car, then his were the first queries, his queries ran ok and those of everybody else were slow. When he came by bicycle, then somebody else tackled the db first and it optimized for the more average queries.