Smart Watches are finally going to happen - Pebble Watch Reviewed

The Pebble Watch is shipping and it's revolutionary, fresh and new! Well, kind of. Dick Tracy got his first smart watch in 1946. It seems we've always wanted a computer and communicator on our wrists. I remember wanting to buy a TV watch in the 80s and we all had Casio Databank watches growing up (well, the nerds did).

The history of smart watches goes back much further, of course, as Microsoft Research's Bill Buxton points out. He has a collection of smart watches going back almost 40 years!

My buddy Karl still wears and uses his Timex Datalink watch, originally made in 1994. Almost ten years ago I bought a Microsoft SPOT Watch from Fossil and loved every minute of it. It had news, sports, stocks, weather and the occasional email. However, it didn't connect to my phone (it was mostly PalmPilots back then, and the occasional BlackBerry pager) and Bluetooth wasn't a thing quite yet.

Smartphone sales are flattening out because, well, everyone who wants one has one. There are only so many things you can sell with batteries, screens, and wireless technology, right? Moreover, young people don't wear watches! I'm a watch guy myself, with a watch case and a growing collection. I like watches old and new. I'm often teased by my younger co-workers, who declare, "My phone is my watch! Why would I want something else to carry around?" Watches are such an outmoded concept, right?

I, for one, think that the wrist is the next big thing. This is a market that has been right on the edge of mainstream adoption for at least a decade, if not for the last 70 years!

The Pebble Smart Watch

I was a backer of the Pebble's Kickstarter campaign, and my red Pebble showed up last week. It's worth noting that Kickstarter isn't a store or marketplace, but rather a place where you invest in an idea and watch it grow. Many Kickstarters fail. You might invest your money and never see a return on your investment. Fortunately, the Pebble succeeded, and almost a year later my investment arrived.

The packaging was very Kindle-esque and classy in its recycled granola-ness. It was simple and served its purpose well. The package included just the watch and the charging cable. Sadly, the charging cable is custom, with an unnecessarily clever (and weak) magnetic connector. I'd have preferred a micro-USB port (and in fact, would for every device) so that I wouldn't have to travel with yet-another-unique-cable-I-can't-afford-to-lose-can't-tell-apart-at-a-distance-and-will-eventually-lose-in-my-bag. But I'm not bitter.

Initial Impressions

The watch is lovely. Truly. Given that I paid $125 for it (a reasonable price considering its potential), I'm happy with it and would recommend it. Still, it feels...cheap. It's plastic. It's light. It's glossy and smears easily. I'm afraid it will crack or scratch if I bump it while walking. It's fine, but it's not, well, it's not an Apple Product. It's not made of magical Surface Magnesium. It's a plastic watch with a rubber wristband.

It's a little too tall for my taste. This makes the Pebble a half-inch taller than the average (read: my) wrist, so it sticks out just enough that I notice it. I prefer the wider, square style of the iPod "watch." The Pebble is not at all unattractive, but I assume the creators must be thick-wristed people not to have noticed this.

What do we really want? Something with the intense attention to detail and build quality of an Apple iPod Nano 6th generation combined with a Lunatik Watch Strap. Now THAT'S a fantastic watch.

The idea of an iWatch is an attractive one...and combining what you get today with a Pebble and what you get with a (now discontinued) iPod Nano would be near-ideal.

The Pebble gets you:

- Low-power Bluetooth 4 connectivity

- An open SDK

- Reasonable battery life of a few days

- Vibrations

- Motion-activated backlight

- Potential!

- A 144x168 1-bit display

The iPod Nano (6th generation) "watch" gets you:

- Fantastic build quality

- A color screen

- Touch!

- FM Radio, Photos, Music, Headphone Jack

- Pedometer

- Crappy battery life of a day at best

- A 240x240 color TFT display

The Pebble effectively gives us connectivity and an SDK. If the iWatch does come (and it will), it had better have all these things. That said, I expect it won't have an open SDK (Watch App Store, anyone?) and it won't work with Android the way the Pebble does. Therefore, iOS people will get iWatches, and Android people will get Pebbles. Check back in six months and we shall see!

What the Pebble does for an iPhone

My main phone is an iPhone 4S. The Pebble really shines on Android, I am told, as the open Android OS gives developers free rein. Still, I've been very happy with the device exactly as it is, even if it never improved...and it will.

Today the Pebble is a Bluetooth-connected watch that will give you:

- Vibration notifications and the full text of SMS texts sent to your phone.

- This is brilliant, and it's the vibration that is the key. I was in the movies just this evening and got a text from the babysitter. The watch discretely let me see that it wasn't urgent without removing my phone from my pocket.

- SMS notifications are totally reliable on the Pebble with iOS but email notifications barely work. They say they'll fix this soon.

- Clear, backlit display

- Go into settings, and turn on Motion Activated Backlight and the Pebble will light up with a gentle shake. This was nice at the movies tonight.

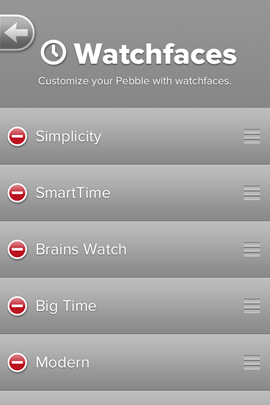

- Customizable watch faces on a clear screen that is visible in total darkness (due to its backlight) and in bright sunlight (due to its low-power memory LCD).

- It's great to be able to change the LCD screen with new watch faces, but I admit I'd have appreciated plastic colorful watch covers. It's nice I have a red Pebble, but it's forever red. Why not make the face swappable?

- Answer and Hang-up the Phone

- When paired with a Bluetooth headset, this means you can get a call, glance at your watch, see who it is, and answer it without touching your phone. iOS doesn't show the name, just the number. Again, we shall see.

This is the Pebble today. Tomorrow promises apps and better notifications.

What Pebble Needs

The Pebble needs what my SPOT Watch had ten years ago. I want:

- Weather alerts

  - How about Dark Sky weather alerts on my Pebble? Surely they are working on a notification bridge for third-party apps?

- News, stocks

  - Breaking news and stock price alerts would be lovely.

- Quick SMS responses

  - "I'll call you back" and a few other quick replies to texts would be a nice time saver.

- Motion details: speedometer, GPS, etc.

  - These would all use the Pebble as a "remote view" of an app that does the real work, but they would be great for exercise.

- Calendar

  - What's my next appointment? Give me Google Now, but on my watch.

The Future of Pebble is Bright

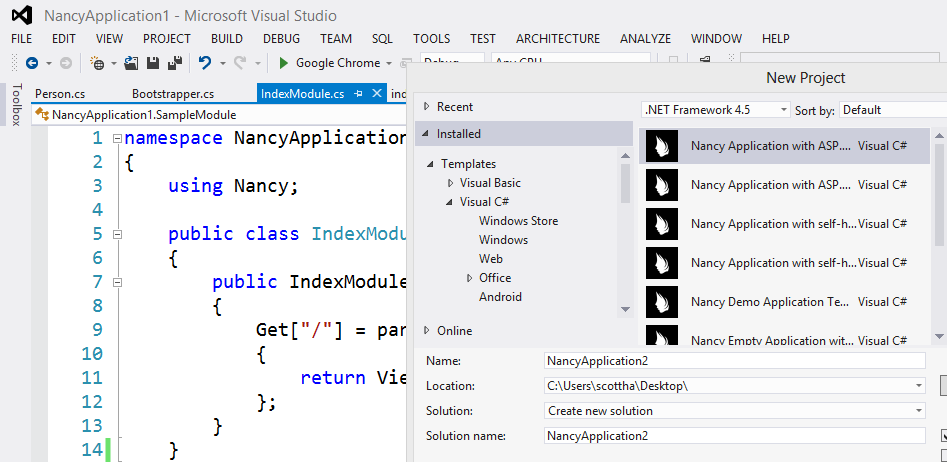

Give people an easy-to-use SDK and let them download (side-load) apps directly to their devices, and you'll get a thriving community in no time. That's what Pebble has done. Watch faces are written in tight C and usually just move PNGs around. There's lots of info at http://developer.getpebble.com. One-way apps are now starting to show up, with two-way apps coming soon (although Pebble will be limited on iOS due to Apple-imposed restrictions). There are also dozens of great watch faces you can get at http://www.mypebblefaces.com. You can download Pebble watch faces directly from your phone, which acts as a bridge to the watch. The experience is two clicks, really clean and simple.

Smart Watches are the next big thing. You watch.

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.
