Faster Builds with MSBuild using Parallel Builds and Multicore CPUs

UPDATE: I've written an UPDATE on how to get MSBuild building using multiple cores from within Visual Studio. You might check that out when you're done here.

Jeff asked the question "Should All Developers Have Manycore CPUs?" this week. There are a number of things in his post I disagree with.

First, "dual-core CPUs protect you from badly written software" is just a specious statement: it's the OS's job to protect you, and it shouldn't matter how many cores there are.

Second, "In my opinion, quad-core CPUs are still a waste of electricity unless you're putting them in a server" is also silly. Considering that modern CPUs slow down when not being used, and use minimal electricity when compared to your desk lamp and monitors, I can't see not buying the best (and most) processors that I can afford. The same goes for memory. Buy as much as you can comfortably afford. No one ever regretted having more memory, a faster CPU and a large hard drive.

Third, he says, "there are only a handful of applications that can truly benefit from more than 2 CPU cores," but of course, if you're running a handful of applications, you can benefit even if they are not multi-threaded. Just yesterday I was rendering a DVD, watching Arrested Development, compiling an application, and reading email while my system was being backed up by Home Server. This isn't an unreasonable amount of multitasking, IMHO, and this is why I have a quad-proc machine.

That said, how far a machine can multitask is often limited by the bottleneck that sits between the chair and keyboard. Jeff, of course, must realize this, so I'm just taking issue with his phrasing more than anything.

He does add the disclaimer, which is totally valid: "All I wanted to do here is encourage people to make an informed decision in selecting a CPU" and that can't be a bad thing.

MSBuild

Now, enough picking on Jeff, let's talk about my reality as a .NET Developer and a concrete reason I care about multi-core CPUs. Jeff compiled SharpDevelop using 2 cores and said "I see nothing here that indicates any kind of possible managed code compilation time performance improvement from moving to more than 2 cores."

When I compiled SharpDevelop via "MSBuild SharpDevelop.sln" (which uses one core) it took 11 seconds:

TotalMilliseconds : 11207.7979

Adding the /m:2 parameter to MSBuild yielded a 35% speed up:

TotalMilliseconds : 7190.3041

And adding /m:4 yielded (from 1 core) a 59% speed up:

TotalMilliseconds : 4581.4157
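As a quick check on those percentages, here is a minimal Python sketch that recomputes them from the three timings above. The function names are mine, and "speed up" is read here as the share of single-node build time saved, which is an assumption about how the figures were derived.

```python
# Wall-clock timings reported above, in milliseconds, keyed by /m node count.
timings_ms = {1: 11207.7979, 2: 7190.3041, 4: 4581.4157}


def time_saved_percent(baseline_ms, parallel_ms):
    """Percentage of the single-node build time that was saved."""
    return (baseline_ms - parallel_ms) / baseline_ms * 100


def speedup_factor(baseline_ms, parallel_ms):
    """How many times faster the parallel build ran."""
    return baseline_ms / parallel_ms


baseline = timings_ms[1]
for nodes, ms in timings_ms.items():
    print(f"/m:{nodes}: {time_saved_percent(baseline, ms):.1f}% less time, "
          f"{speedup_factor(baseline, ms):.2f}x faster")
```

Note that going from 2 to 4 nodes does not halve the time again, which is what you would expect once project dependencies and I/O start to limit the build.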

Certainly when doing a command line build, why WOULDN'T I want to use all my CPUs? I can detect how many there are using an Environment Variable that is set automatically:

C:\>echo %NUMBER_OF_PROCESSORS%

4
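If a build script needs the node count spelled out, something like the following Python sketch could assemble the command line. The helper name `msbuild_command` is hypothetical, and falling back to `os.cpu_count()` when the environment variable is missing is my assumption, not something MSBuild does.

```python
import os


def msbuild_command(solution, node_count=None):
    """Build an MSBuild argument list that requests `node_count` nodes.

    When node_count is None, read the NUMBER_OF_PROCESSORS environment
    variable (set by Windows) and fall back to os.cpu_count().
    """
    if node_count is None:
        env_value = os.environ.get("NUMBER_OF_PROCESSORS")
        node_count = int(env_value) if env_value else (os.cpu_count() or 1)
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    return ["MSBuild", solution, f"/m:{node_count}"]


print(msbuild_command("SharpDevelop.sln", 4))
```

The resulting list can be handed to `subprocess.run` on a machine where MSBuild is on the PATH.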

But if I just say /m to MSBuild like

MSBuild /m

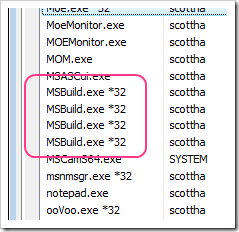

It will automatically use all the cores on the system to create that many MSBuild processes in a pool as seen in this Task Manager screenshot:

The MSBuild team calls these "nodes" because they are cooperating and act as a pool, building projects as fast as they can to the point of being I/O bound. You'll notice that their PIDs (Process IDs) won't change while they are living in memory. This means they are recycled, saving startup time over running MSBuild over and over (which you wouldn't want to do, but I've seen in the wild.)

You might wonder, why do we not just use one multithreaded process for MSBuild? Because each building project wants its own current directory (and potentially custom tasks expect this) and each PROCESS can only have one current directory, no matter how many threads exist.
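The one-current-directory-per-process constraint is an operating system property, not something specific to MSBuild, and it is easy to see for yourself. This is a small Python sketch (not MSBuild code) in which a worker thread changes directory and the main thread's directory changes with it.

```python
import os
import tempfile
import threading


def chdir_in_thread(path):
    """Change the current directory from a worker thread."""
    worker = threading.Thread(target=os.chdir, args=(path,))
    worker.start()
    worker.join()


original = os.getcwd()
with tempfile.TemporaryDirectory() as project_dir:
    chdir_in_thread(project_dir)
    # The main thread never called chdir, yet its directory changed too.
    changed_for_everyone = os.path.samefile(os.getcwd(), project_dir)
    os.chdir(original)  # restore before the temporary directory is removed

print("worker thread changed the main thread's directory:", changed_for_everyone)
```

Two projects building on two threads of one process would fight over that single directory, which is why separate processes are the simpler design.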

When you run MSBuild on a SLN (Solution File) (which is NOT an MSBuild file) then MSBuild will create a "sln.cache" file that IS an MSBuild file.

Some folks like to custom craft their MSBuild files and others like to get the auto-generated one. Regardless, when you're calling an MSBuild task, one of the options that gets set is (from an auto-generated file):

<MSBuild Condition="@(BuildLevel1) != ''" Projects="@(BuildLevel1)" Properties="Configuration=%(Configuration); Platform=%(Platform); ...snip... BuildInParallel="true" UnloadProjectsOnCompletion="$(UnloadProjectsOnCompletion)" UseResultsCache="$(UseResultsCache)"> ...

When you indicate BuildInParallel you're asking for parallelism in building your Projects. It doesn't cause Task-level parallelism, as that would require a task dependency tree, and you could get some tricky problems as copies, etc., happened simultaneously.

However, Projects DO often have dependency on each other and the SLN file captures that. If you're using a Visual Studio Solution and you've used Project References, you've already given the system enough information to know which projects to build first, and which to wait on.
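To make the "which projects to build first" idea concrete, here is a minimal Python sketch that groups projects into levels from their references, in the spirit of the BuildLevel1 item in the generated file. It illustrates the concept only and is not MSBuild's actual algorithm; the project names are hypothetical.

```python
def build_levels(references):
    """Group projects into levels; each level depends only on earlier levels.

    `references` maps a project name to the projects it references.
    Every referenced project must also appear as a key.
    """
    remaining = {name: set(deps) for name, deps in references.items()}
    levels = []
    built = set()
    while remaining:
        # A project is ready once everything it references has been built.
        ready = sorted(name for name, deps in remaining.items() if deps <= built)
        if not ready:
            raise ValueError("circular or missing project reference")
        levels.append(ready)
        built.update(ready)
        for name in ready:
            del remaining[name]
    return levels


# Hypothetical solution: a core library, two libraries on top, an app, and tests.
references = {
    "Core": [],
    "Data": ["Core"],
    "UI": ["Core"],
    "App": ["Data", "UI"],
    "App.Tests": ["App"],
}
print(build_levels(references))
# prints [['Core'], ['Data', 'UI'], ['App'], ['App.Tests']]
```

Projects within one level do not depend on each other, so they are the ones that can be handed to different nodes at the same time.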

More Granularity (if needed)

If you are custom-crafting your MSBuild files, you could turn off parallelism on just certain MSBuild tasks by adding:

BuildInParallel="$(BuildInParallel)"

to specific MSBuild Tasks and then just those sub-projects wouldn't build in parallel if you passed in a property from the command line:

MSBuild /m:4 /p:BuildInParallel=false

But this is an edge case as far as I'm concerned.

How does BuildInParallel relate to the MSBuild /m Switch?

Certainly, if you've got a lot of projects that are mostly independent of each other, you'll get more speed up than if your solution's dependency graph is just a queue of one project depending on another all the way down the line.
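A toy simulation shows the difference between the two shapes of dependency graph. This Python sketch assumes every project takes exactly one time step, which real builds do not, and it uses a simple greedy scheduler of my own rather than anything MSBuild does; the project names are placeholders.

```python
def build_steps(references, nodes):
    """Count unit-time steps to build everything with `nodes` workers.

    `references` maps a project name to the projects it references.
    Every project is assumed to take exactly one step.
    """
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    remaining = {name: set(deps) for name, deps in references.items()}
    built = set()
    steps = 0
    while remaining:
        ready = sorted(n for n, deps in remaining.items() if deps <= built)
        if not ready:
            raise ValueError("circular or missing project reference")
        batch = ready[:nodes]  # at most one project per node per step
        built.update(batch)
        for name in batch:
            del remaining[name]
        steps += 1
    return steps


# Eight projects with no references at all.
independent = {f"P{i}": [] for i in range(8)}
# Eight projects where each one references the previous one.
chain = {f"P{i}": ([f"P{i - 1}"] if i else []) for i in range(8)}

for label, graph in (("independent", independent), ("chain", chain)):
    print(label, [build_steps(graph, nodes) for nodes in (1, 2, 4)])
# prints:
# independent [8, 4, 2]
# chain [8, 8, 8]
```

With the chain, extra nodes sit idle because only one project is ever ready to build.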

In conclusion, BuildInParallel allows the MSBuild task to process the list of projects which were passed to it in a parallel fashion, while /m tells MSBuild how many processes it is allowed to start.

If you have multiple cores, you should be using this feature on big builds from the command line and on your build servers.

Thanks to Chris Mann and Dan Mosley for their help and corrections.

Related Links

- MSBuild Blog - The MSBuild team's blog.

- MSBuild Sidekick v2 - A visual editor, ala NantPad, for MSBuild files.

- MSBuild Visualizer - An old project of Mitch Denny's trying to make large MSBuild files easier to visualize.

- How to get MSBuild building using multiple cores from within Visual Studio

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.


"Quad-core not really needed" – I agree with Scott, for the most part, get the quad core. Except that you really don’t want your ultimate developer rig to be a gamer machine in disguise. The kids get the gamer rig. (Really, Scott, TWO high-end GPUs connected together SLI/cross-fire on the dev box? ... :-)

As far as a “handful of applications” goes - Scott has this one right, too. My machine is currently running 56 processes totaling more than 400 threads! (and that’s without anti-virus crap-ware). Maybe Jeff has a point with the "truly benefit" criteria, but, seriously, who is not writing multi-threaded apps nowadays?

Build Time – Always add more power to the dev boxes. It will pay off. Software engineers still cost much more than hardware.

Regards,

--

Jason

But on the performance question, here's a different one for you: if you wanted better performance, which of the following would you choose?

1) Quad-core processor, 7200 RPM drive

2) Dual-core processor, 64GB Solid State Drive.

Hm, I don't trust SSD yet. There's no recovery story and I question the MTBF numbers. That said, I'll probably jump in on the 2nd gen when it hits 128 GB, at least for my C drive. I'll also get a newer processor for the Quad machine.

Oh, and my high-end video cards are NOT hooked up in SLI. They run 4 monitors. I don't use it for games.

I knew this would happen as soon as I read Jeff's post a few days back - FIGHT FIGHT FIGHT FIGHT!

If you are custom-crafting your MSBuild files, you could turn off parallelism on just certain MSBuild tasks by adding:

...

But this is an edge case as far as I'm concerned.

That's a pretty big caveat right there. We had to ditch solution files about 1-1/2 years ago because msbuild couldn't get the dependency tree right, likely due to a size limitation (~200 projects). I suspect that's why others generate their own msbuild files as well.

Ironic that the code bases most in need of scalable build solutions are the ones left high and dry.

VMWare can use 2 CPUs, so if you have a quad-core or more, you will benefit from the extra CPU horsepower. At one point, I was actively using 2-3 VMs on a single box...

Cheers

On the core debate, if one of the cores in the dual cored CPU is faster than one in the quad, then I'm leaning towards Jeff's reasoning. Of course, it depends on how much you load the CPU, but if most of what you do is compile code -- and since VS can't use more than one core from what you're telling us -- plus some small stuff (like listening to music, browsing, which usually doesn't even move the CPU, unless you're using Vista <g> I'm using Windows Server here), then I think the performance of a single core is more important than having 3 more cores that are not used. Mileage may vary of course...

Keep us posted on what you find!

Regarding the waste of energy: I don't think Intel does throttling per core but rather per CPU. Thus 4 cores CPU will use more energy than 2 cores CPU even though 2 cores (or less) are being used. OTOH AMD does throttling per core. I didn't actually test those assumptions :-)

I'd appreciate that. I'll dig up the details today and get them to you offline. Thanks.

Scott, if you can figure out how to apply this to normal (CTRL+SHIFT+B) builds, just think of the productivity gains if everyone builds just 10 times a day! It'll be like a little economic stimulus package for the IT industry. 10 builds/day * 2 seconds * 1000 developers * 5 work days. That's like 28 extra hours of work being done and I think that's fairly optimistic.

For what it's worth, the /m parameter is only available in .NET 3.5 and above.

It's funny that I always have to read through the comments to verify that kind of stuff.

@Scott, there are still people using VS2005 and .NET 2.0 (our employers are either too cheap to upgrade or we work in non-profit [my case]). So it would be nice for posts like this to clearly denote that these are things only available in the 3.0/3.5+ world. Or should I just assume that .NET 2.0 is dead?

Can't wait for this ability within visual studio ...

HANDS DOWN, the quad core systems are SUPER FAST. I never wait for anything on those systems.

Also when encoding files for uploading to live.Silverlight.com, all 4 cores are busy encoding my files.

I'll never buy another dual core, ever.

Cheers,

Karl

Is there a better method to manage code in a secure manner than pre-compiled deployment?

The type or namespace name 'Foo' does not exist in the namespace 'Bar'

For fun, I tried changing BuildInParallel to false and got the same error. I should also mention that I am using this trick to build my solution: http://www.sedodream.com/PermaLink,guid,2a926fd2-70ce-4b95-a489-2d6aa24bc7da.aspx

Maybe this trick will not work with that trick?

MSBUILD : error MSB1001: Unknown switch.

Switch: /m

I have .NET 3.0 installed. Happens on both my xp workstation and on a 64bit W2K3 system. I really want to take advantage of this feature.
