Announcing .NET Core 2.1 RC 1 Go Live AND .NET Core 3.0 Futures

I just got back from the Microsoft BUILD Conference where Scott Hunter and I both announced .NET Core 2.1 RC1 AND talked about what's coming in .NET Core 3.0.

.NET Core 2.1 RC1

First, .NET Core 2.1's Release Candidate is out. This one has a Go Live license and it's very close to release.

You can download and get started with .NET Core 2.1 RC 1, on Windows, macOS, and Linux:

- .NET Core 2.1 RC 1 SDK (includes the runtime)

- .NET Core 2.1 RC 1 Runtime

You can see complete details of the release in the .NET Core 2.1 RC 1 release notes. Related instructions, known issues, and workarounds are included in the release notes. Please report any issues you find in the comments or at dotnet/core #1506. ASP.NET Core 2.1 RC 1 and Entity Framework Core 2.1 RC 1 are also releasing today. You can develop .NET Core 2.1 apps with Visual Studio 2017 15.7, Visual Studio for Mac 7.5, or Visual Studio Code.

Here's a deep dive on the performance benefits, which are SIGNIFICANT. It's also worth noting that you can get 2x+ speed improvements for your builds/compiles by using the .NET Core 2.1 RC SDK for building while continuing to target earlier .NET Core releases, like 2.0, for the Runtime.
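The SDK you build with and the runtime you target are two separate things. Here's a minimal sketch of how you might check both before building. The helper names and `MyApp.csproj` are made up for illustration, and it assumes the project file has a single `<TargetFramework>` element on one line.

```shell
#!/bin/sh
# Hypothetical helper: print the <TargetFramework> value from a project file.
# Assumes the element sits on a single line, as in the default templates.
target_framework() {
    sed -n 's:.*<TargetFramework>\([^<]*\)</TargetFramework>.*:\1:p' "$1"
}

# Hypothetical helper: show which SDK will do the build and what it targets.
report_sdk_and_target() {
    project="$1"
    if command -v dotnet >/dev/null 2>&1; then
        sdk="$(dotnet --version)"   # version of the SDK on the PATH
    else
        sdk="not installed"
    fi
    echo "SDK: $sdk, target: $(target_framework "$project")"
}

# Usage (MyApp.csproj is a placeholder):
#   report_sdk_and_target MyApp.csproj
#   dotnet build MyApp.csproj
```

If the target still reads `netcoreapp2.0` while `dotnet --version` reports a 2.1 SDK, you're in the "newer SDK, older runtime" setup described above.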

- Go Live - You can put this version in production and get support.

- Alpine Support - There are docker images at 2.1-sdk-alpine and 2.1-runtime-alpine.

- ARM Support - We can compile on Raspberry Pi now! .NET Core 2.1 is supported on Raspberry Pi 2+. It isn’t supported on the Pi Zero or other devices that use an ARMv6 chip. .NET Core requires ARMv7 or ARMv8 chips, like the ARM Cortex-A53. There are even Docker images for ARM32.

- Brotli Support - new lossless compression algo for the web.

- Tons of new Crypto Support.

- Source Debugging from NuGet Packages (finally!) called "SourceLink."

- .NET Core Global Tools:

dotnet tool install -g dotnetsay
dotnetsay
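If you're not sure whether your board clears the ARM bar from the list above, here's a rough sketch that classifies the `uname -m` string. The function name is made up, and the patterns are my assumption about what common Linux distributions report. It only checks the chip family named above, not whether a build exists for your particular OS.

```shell
#!/bin/sh
# Hypothetical helper: classify a `uname -m` machine string against the
# ARMv7/ARMv8 requirement. Patterns are assumptions about typical values.
arm_chip_check() {
    case "$1" in
        armv6*)                       echo "unsupported" ;;        # e.g. Pi Zero
        armv7*|armv8*|aarch64|arm64)  echo "meets-requirement" ;;  # e.g. Pi 2 and later
        *)                            echo "not-arm" ;;
    esac
}

# Check the machine this script is running on.
arm_chip_check "$(uname -m)"
```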

In fact, if you have Docker installed, go try an ASP.NET sample:

docker pull microsoft/dotnet-samples:aspnetapp
docker run --rm -it -p 8000:80 --name aspnetcore_sample microsoft/dotnet-samples:aspnetapp

.NET Core 3.0

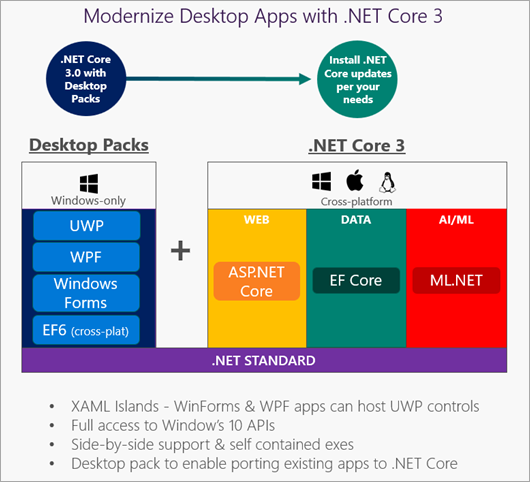

This is huge. You'll soon be able to take your existing WinForms and WPF apps (I did this with a 12-year-old WPF app!) and swap out the underlying runtime. That means you can run WinForms and WPF on .NET Core 3 on Windows.

"Bringing desktop workloads to run on the top of .NET Core is great. We would love to close the loop and open source them as well. We are investigating how to do that." - Scott Hunter, Director PM, .NET, Microsoft

Why is this cool?

- WinForms/WPF apps can be self-contained and run in a single folder.

No need to install anything, just xcopy deploy. WinFormsApp1 can't affect WPFApp2 because they can each target their own .NET Core 3 version. Updates to the .NET Framework on Windows are system-wide and can sometimes cause problems with legacy apps. You'll now have total control and can update apps one at a time, and they can't affect each other. C#, F# and VB already work with .NET Core 2.0. You will be able to build desktop applications with any of those three languages with .NET Core 3.

Secondly, you'll get to use all the new C# 7.x+ (and beyond) features sooner than ever. .NET Core moves fast but you can pick and choose the language features and libraries you want. For example, I can update BabySmash (my .NET 3.5 WPF app) to .NET Core 3.0 and use new C# features AND bring in UWP Controls that didn't exist when BabySmash was first written! WinForms and WPF apps will also get the new lightweight csproj format. More details here and a full video below.

- Compile to a single EXE

Even more, why not compile the whole app into a single EXE? I can make BabySmash.exe and it'll just work. No install, everything self-contained.
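The self-contained, single-folder idea already exists for console and web apps on today's .NET Core SDK, so here's a sketch of what that looks like. The helper name and the `BabySmash.csproj` path are placeholders, and I'm assuming the 2.x behavior where passing a runtime identifier with `-r` publishes the runtime alongside the app. How WinForms/WPF projects will publish on 3.0 may well differ, and this produces a folder, not the single EXE.

```shell
#!/bin/sh
# Hypothetical helper: publish a project as a self-contained app for one
# runtime identifier (RID). Set DRY_RUN=1 to print the command instead.
publish_self_contained() {
    project="$1"
    rid="${2:-win-x64}"   # default RID is an assumption for this sketch
    # With a RID specified, the 2.x SDK bundles the runtime with the output.
    set -- dotnet publish "$project" -c Release -r "$rid"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage (placeholder path):
#   publish_self_contained ./BabySmash/BabySmash.csproj win-x64
```

Copy the resulting publish folder to another machine and the app runs without a separate .NET Core install, which is the xcopy-deploy story described above.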

.NET Core 3 will still be cross platform, but WinForms and WPF remain "W is for Windows" - the runtime is swappable, but they still P/Invoke into the Windows APIs. You can look elsewhere for .NET Core cross-platform UI apps with frameworks like Avalonia, Ooui, and Blazor.

You can check out the video from BUILD here. We show 2.1, 3.0, and some amazing demos like compiling a .NET app into a single exe and running it on a computer from the audience, as well as taking the 12-year-old BabySmash WPF app and running it on .NET Core 3.0 PLUS adding a UWP Touch Ink Control!

Lots of cool stuff coming today AND tomorrow with open source .NET Core!


About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.
