Hack: Parallel MSBuilds from within the Visual Studio IDE

There were a number of interesting comments from my post on Faster Builds with MSBuild using Parallel Builds and Multicore CPUs.

Here are a few good ones, with some answers. First,

Is the developer that sits inside of VS all day debugging able to take advantage of the same build performance improvements?

Yes, I think. This post is about my own hack to make it work.

@Scott, there are still people using VS2005 and .Net 2.0 (our employers are either too cheap to upgrade or we work in non-profit[my case]) So it would be nice for posts like this to clearly denote that these are things only available in the 3.0/3.5+ world. Or should I just assume that .Net 2.0 is dead?

Certainly 2.0 is not dead, considering that 3.5 is still built on the 2.0 CLR. Additionally, if you're a 2.0 shop, there's really no reason not to upgrade to VS2008 and get all the IDE improvements while still building for .NET 2.0 SP1, as MSBuild supports multitargeting.

However, the underlying question here is, "why are you teasing me?" as this gentleman clearly wants faster builds on .NET 2.0. Well, this trick under VS2008 still works when building 2.0 stuff.

If you're using a Solution File (SLN) it's automatic, and if you're using custom MSBuild files, you can build .NET 2.0 stuff with the 3.5 MSBuild by putting

<TargetFrameworkVersion>v2.0</TargetFrameworkVersion>

under a <PropertyGroup> in your C# project. Then you can use /m and get multiproc builds. Not much changes though, because either way your build output is running on CLR2.0. (.NET 3.0 and .NET 3.5 use CLR2.0 underneath.) You will get some warnings if you try to use v3.0/v3.5 types.
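As a rough sketch, here's where that property might sit in a C# project file. Everything except the TargetFrameworkVersion element is my own placeholder: the assembly name, the Class1.cs file, and the ToolsVersion="3.5" attribute are assumptions about what a VS2008-era project would typically look like, not something taken from a real project.

```xml
<!-- Hypothetical minimal C# project; names and files are illustrative only -->
<Project ToolsVersion="3.5" DefaultTargets="Build"
         xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <PropertyGroup>
    <OutputType>Library</OutputType>
    <AssemblyName>MyLibrary</AssemblyName>
    <!-- Built by the 3.5 MSBuild, but targeting the 2.0 framework -->
    <TargetFrameworkVersion>v2.0</TargetFrameworkVersion>
  </PropertyGroup>
  <ItemGroup>
    <Compile Include="Class1.cs" />
  </ItemGroup>
  <Import Project="$(MSBuildToolsPath)\Microsoft.CSharp.targets" />
</Project>
```

Running the 3.5 MSBuild with /m against a solution containing projects like this one should then give you the multiproc build while still producing 2.0 assemblies.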

It's true that parallel managed code compilation lags behind unmanaged.

Welcome, msbuild, to the 21st century. ;-) GNU make has the -j switch (the equivalent to /m here) _at least_ since the early-to-mid 1990's. We use its mingw port to drive Visual Studio 2003's commandline interface to build vcproj files and to handle dependencies, and since we got multi-core build machines, our build times have improved tremendously (we're talking about roughly 2,5 million SLOCs).

There are a number of reasons for that. For example, Visual Basic is compiling in the background all the time while you're typing in VS, using a non-reentrant in-proc compiler. The in-proc C# compiler is the same way.

The Multicore MSBuild with VS Hack

This trick is unsupported because it hasn’t been tested. Off the top of our collective head, I can’t think of why it wouldn’t work OK, at least if your solution is all VB/C#. Having VC projects in your solution, especially if there are project references between them and the VB/C# projects, would probably not work so well. That’s because VC projects are not in native MSBuild format in VS2008.

To start with, this is a totally obvious hack. We'll add an External Tool to Visual Studio to call MSBuild, then add some Toolbar buttons.

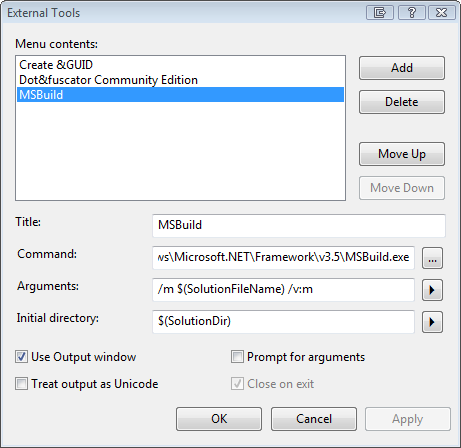

First, Tools | External Tools. We'll add MSBuild, pointing it to the v3.5 .NET Framework folder. Then, we'll add /m to the arguments for multi-proc and /v:m (where the v is verbosity and the m is minimal) to tone down MSBuild's chattiness. Finally, we'll check the Use Output Window box and set the initial directory to the current Solution's Directory.

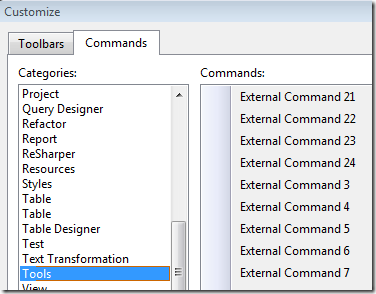

Next, we'll right click on the Toolbar, and hit Customize.

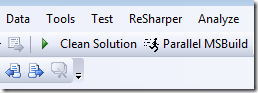

Then, from the Commands tab, drag the numbered entry for the newly added External Tool (mine is #3) to the Toolbar. I also added a little Lode Runner icon to the button. Additionally, I added a Clean Solution option, just because I like it handy.

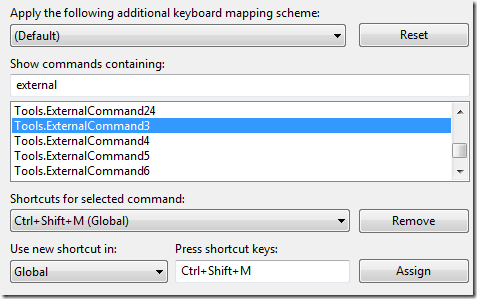

You can also re-map Ctrl-Shift-B if it makes you happy, but I used Ctrl-Shift-M, mapping it to Tools.ExternalCommand3 (again, the number is #3 in my case).

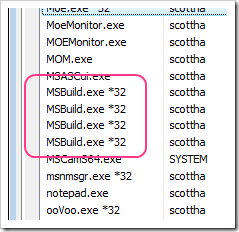

At this point, I can now run MSBuild out-of-proc from inside Visual Studio.

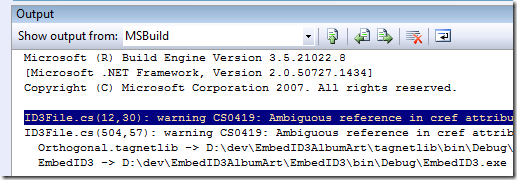

What DOESN'T Work

The only thing that doesn't work for me so far is that the errors reported by MSBuild don't appear neatly parsed in the Error List. For some, this may be a deal breaker. However, the good news is that if you double click on the errors in the Output Window you WILL get taken automatically to the right file and line number which is all I personally care about. Still, sucks.

Gotchas for this Hack

If you're building against a SLN file, use Project References or manually specified project dependencies; otherwise MSBuild has no way to figure out the dependencies, and you might get "file locked" or other weird concurrency errors.
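For reference, a Project Reference in a C# project file looks roughly like the fragment below. This is only a sketch: the relative path, the all-zero GUID, and the name Common are placeholders I made up, and Visual Studio normally writes this for you when you use Add Reference and pick a project.

```xml
<!-- Hypothetical fragment of a .csproj; path, GUID and name are placeholders -->
<ItemGroup>
  <ProjectReference Include="..\Common\Common.csproj">
    <Project>{00000000-0000-0000-0000-000000000000}</Project>
    <Name>Common</Name>
  </ProjectReference>
</ItemGroup>
```

With references declared this way, MSBuild can see that the referenced project has to finish before this one starts, instead of racing the two against each other.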

Also, even if Project to Project references are set up, sometimes project authors are a bit sloppy (I've done this) and inadvertently access the same file in multiple projects, which can cause spurious file locking/access errors. If you see this behavior, turn off multiproc.

Note also that while you can do builds with MSBuild like this, if you hit F5 and start a debug session, the VS in-process compilers will do a quick build. It WILL figure out that it doesn't need to do anything, but it will check.

Hey, it's NOT faster for me?

If you have a small solution (<10 projects) and/or your solution is designed such that each project "lines up" in a dependency chain rather than a dependency tree, then there will be less parallelism possible.

If you're using a small number of projects, or you're using Visual Basic on small projects, which is compiling in the background anyway, you might not get big changes. Additionally, remember that we're shelling out n new instances of MSBuild, while VS compiles using the in-process versions of the language compilers. With a small number of projects, in-proc single-core might be faster.

I've got 900 Projects and Visual Studio is Slow, what's the deal?

"Doctor, doctor, it hurts when I do that!"

"Then don't do that"

Seriously, yes, there are limits. Personally, if you have more than 50 or so projects, it's time to break them out into sub-system SLN files, and keep the über-solution just for the build server.

The team is aware that if you have hundreds of projects in a solution VS totally breaks down. I, and many others, have made suggestions of things like a "tree of projects" where you could build from one point down, and there's ways you can simulate this with multiple-configurations, but it's kinda lame right now if you have a buttload of projects.

Here's a post I did three years ago called How do you organize your code? and I think it still mostly works today. I was using NAnt at the time, but the same concepts apply to MSBuild. Forgive me now, as I quote myself:

Every 'subsystem' has a folder, and usually (not always) a Subsystem is a bigger namespace. In this picture there's "Common" and it might have 5 projects under it. There's a peer Common.Test. Under CodeGeneration, for example, there's 14 projects. Seven are sub-projects within the CodeGeneration subsystem, and seven are the NUnit tests for each. The reason that you see Common and Common.Test at this level is that they are the 'highest.' But the pattern follows as you traverse the depths. Each subsystem has its own NAnt build file, and these are used by the primary default.build.

This is not only a nicer way to lay things out for the project, but also for the people on the project, most of whom are not making cross-subsystem changes.
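Translated to MSBuild, the "primary build file that calls each subsystem" idea might look something like the sketch below. It is not the layout from that old post: the Subsystem item name and the two .sln paths are hypothetical, and it assumes the subsystems listed don't depend on each other's outputs. If they do, building them in parallel invites the same file locking problems mentioned in the gotchas.

```xml
<!-- Hypothetical top-level build file; subsystem paths are placeholders -->
<Project ToolsVersion="3.5" DefaultTargets="Build"
         xmlns="http://schemas.microsoft.com/developer/msbuild/2003">
  <ItemGroup>
    <Subsystem Include="Common\Common.sln" />
    <Subsystem Include="CodeGeneration\CodeGeneration.sln" />
  </ItemGroup>
  <Target Name="Build">
    <!-- BuildInParallel lets MSBuild spread these across nodes when run with /m -->
    <MSBuild Projects="@(Subsystem)" Targets="Build" BuildInParallel="true" />
  </Target>
</Project>
```

Developers would open just the subsystem SLN they work in, while the build server runs the top-level file with /m.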

Action Item for you, Dear Reader

If you have feedback then email the MSBuild team at msbuild@ (you know the company name) and they will listen. I warned them you might be emailing, so be nice. If you DEEPLY want multi-proc builds in the next version of Visual Studio, flood them with your (kind) requests. They are aware of ALL these issues and really want to make things better.

It's interesting as I've been at MSFT about 7 months now to realize that for something like multi-proc builds to work it involves (all) the Compiler Teams, the VS Tooling Teams, and the MSBuild folks. Each has to agree it's important and work towards the goal. Sometimes this is obvious and easy, and other times, less so.

Thanks to Dan Moseley, Cliff Hudson and Cullen Waters for their help!

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.
