Sharing Authorization Cookies between ASP.NET 4.x and ASP.NET Core 1.0

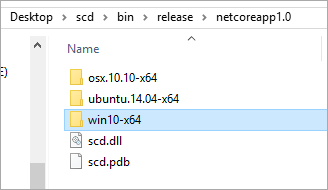

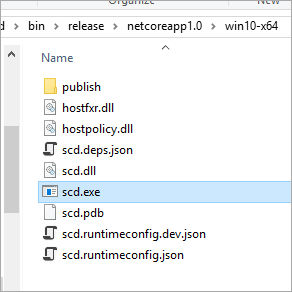

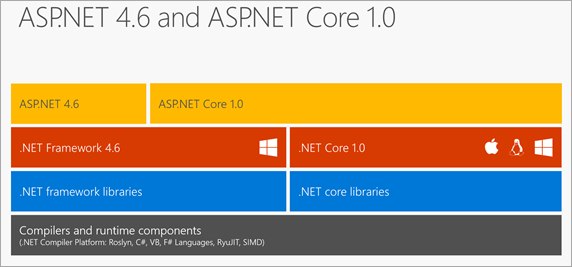

ASP.NET Core 1.0 is out, as is .NET Core 1.0, and lots of folks are making great cross-platform web apps. These are web apps that are built on .NET Core 1.0 and run on Windows, Mac, or Linux.

However, some people don't realize that ASP.NET Core 1.0 (that's the web framework bit) runs on either .NET Core or .NET Framework 4.6 aka "Full Framework."

Once you realize that, it can be somewhat liberating. If you want to check out the new ASP.NET Core 1.0 and use the unified controllers to make web APIs or MVC apps with Razor, you can...even if you don't need or care about cross-platform support. Maybe your libraries use COM objects or Windows-specific stuff. ASP.NET Core 1.0 works on .NET Framework 4.6 just fine.

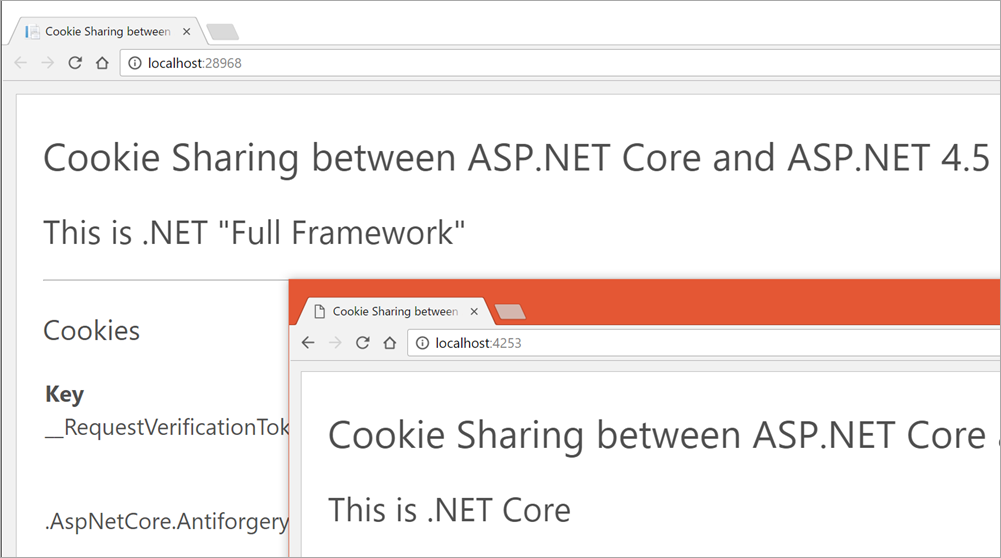

Another option that folks don't consider when talk of "porting" their apps comes up at work is: why not have two apps? There's no reason to start a big porting exercise if your app works great now. Consider that you can have one section of your site on ASP.NET Core 1.0 and another on ASP.NET 4.x, and the two apps can share authentication cookies. The user would never know the difference.

Barry Dorrans from our team looked into this, and here's what he found. He's interested in your feedback, so be sure to file issues on his GitHub Repo with your thoughts, bugs, and comments. This is a work in progress and at some point will be updated into the official documentation.

Sharing Authorization Cookies between ASP.NET 4.x and .NET Core

Barry is building a GitHub repo with two sample apps and a markdown file to illustrate clearly how to accomplish cookie sharing.

When you want to share logins between an existing ASP.NET 4.x app and an ASP.NET Core 1.0 app, you'll be creating a login cookie that can be read by both applications. It's certainly possible for you, Dear Reader, to "hack something together" with sessions and your own custom cookies, but please let this blog post and Barry's project be a warning. Don't roll your own crypto. You don't want to accidentally open up one or both of your apps to hacking because you tried to extend auth/auth in a naïve way.

First, you'll need to make sure each application has the right NuGet packages to interop with the security tokens you'll be using in your cookies.

Install the interop packages into your applications.

- ASP.NET 4.5: open the NuGet package manager or the NuGet console and add a reference to Microsoft.Owin.Security.Interop.
- ASP.NET Core: open the NuGet package manager or the NuGet console and add a reference to Microsoft.AspNetCore.DataProtection.Extensions.

Make sure the Cookie Names are identical in each application

Barry is using CookieName = ".AspNet.SharedCookie" in the example, but you just need to make sure they match.

```csharp
// Startup.ConfigureServices in the ASP.NET Core application
public void ConfigureServices(IServiceCollection services)
{
    services.AddIdentity<ApplicationUser, IdentityRole>(options =>
    {
        options.Cookies = new Microsoft.AspNetCore.Identity.IdentityCookieOptions
        {
            ApplicationCookie = new CookieAuthenticationOptions
            {
                // Must match AuthenticationType in the ASP.NET 4.5 app
                AuthenticationScheme = "Cookie",
                LoginPath = new PathString("/Account/Login/"),
                AccessDeniedPath = new PathString("/Account/Forbidden/"),
                AutomaticAuthenticate = true,
                AutomaticChallenge = true,
                // Must match CookieName in the ASP.NET 4.5 app
                CookieName = ".AspNet.SharedCookie"
            }
        };
    })
    .AddEntityFrameworkStores<ApplicationDbContext>()
    .AddDefaultTokenProviders();
}
```

Remember the CookieName property must have the same value in each application, and the AuthenticationType (ASP.NET 4.5) and AuthenticationScheme (ASP.NET Core) properties must have the same value in each application.
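For the ASP.NET 4.5 side, here is a minimal sketch of the matching OWIN cookie middleware registration. It assumes a Katana-based app with a Startup.ConfigureAuth method like the one in the default templates. The "Cookie" and ".AspNet.SharedCookie" values mirror the ASP.NET Core example; the login path is an assumption. On its own this only lines up the names. The cookie still has to use the shared ticket format and key ring before either app can read the other's cookie.

```csharp
using Microsoft.Owin;
using Microsoft.Owin.Security.Cookies;
using Owin;

// ASP.NET 4.5 (OWIN/Katana) application, e.g. App_Start/Startup.Auth.cs
public partial class Startup
{
    public void ConfigureAuth(IAppBuilder app)
    {
        app.UseCookieAuthentication(new CookieAuthenticationOptions
        {
            // Must equal AuthenticationScheme in the ASP.NET Core app
            AuthenticationType = "Cookie",
            // Must equal CookieName in the ASP.NET Core app
            CookieName = ".AspNet.SharedCookie",
            // Assumed login route; adjust to your app
            LoginPath = new PathString("/Account/Login/")
        });
    }
}
```

If the 4.x app was built from the ASP.NET Identity 2.0 templates, it probably creates its sign-in identities with DefaultAuthenticationTypes.ApplicationCookie. That value would then need to change to the same "Cookie" string so the middleware above picks those identities up.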

Be aware of your cookie domains if you use them

Browsers naturally share cookies across the same domain name. For example, if both your sites run in subdirectories under https://contoso.com, then cookies will automatically be shared.

However, if your sites run on subdomains, a cookie issued to one subdomain will not automatically be sent by the browser to a different subdomain. For example, https://site1.contoso.com would not share cookies with https://site2.contoso.com.

If your sites run on subdomains, you can configure the issued cookies to be shared by setting the CookieDomain property in CookieAuthenticationOptions to the parent domain.

Try to do everything over HTTPS, and be aware that if a cookie has its Secure flag set, it won't flow to an insecure HTTP URL.
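For sites on subdomains, one way to apply both settings to the ASP.NET Core cookie options is a small helper. This is a sketch only: SharedCookieSettings and CreateApplicationCookie are hypothetical names, and ".contoso.com" stands in for your own parent domain. The remaining properties repeat the AddIdentity example.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Http;

// ASP.NET Core 1.0 application. Hypothetical helper, not part of any library.
public static class SharedCookieSettings
{
    public static CookieAuthenticationOptions CreateApplicationCookie(string parentDomain)
    {
        return new CookieAuthenticationOptions
        {
            AuthenticationScheme = "Cookie",
            LoginPath = new PathString("/Account/Login/"),
            AccessDeniedPath = new PathString("/Account/Forbidden/"),
            AutomaticAuthenticate = true,
            AutomaticChallenge = true,
            CookieName = ".AspNet.SharedCookie",
            // A Domain attribute makes browsers send the cookie to the parent
            // domain and every subdomain of it, e.g. site1 and site2.contoso.com
            CookieDomain = parentDomain,
            // Mark the cookie Secure so it is only sent over HTTPS
            CookieSecure = CookieSecurePolicy.Always
        };
    }
}
```

You would assign the result inside the IdentityCookieOptions initializer, for example ApplicationCookie = SharedCookieSettings.CreateApplicationCookie(".contoso.com"). The OWIN CookieAuthenticationOptions in the ASP.NET 4.5 app has its own CookieDomain and CookieSecure (CookieSecureOption.Always) properties, which need the same values.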

Select a common data protection repository location accessible by both applications

From Barry's instructions, his sample uses a shared data protection (DP) key folder, but you have options:

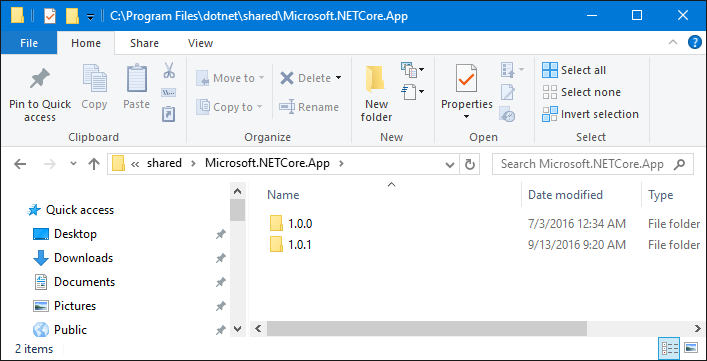

This sample uses a shared directory (C:\keyring). If your applications aren't on the same server, or can't access the same NTFS share, you can use other key ring repositories.

.NET Core 1.0 includes key ring repositories for shared directories and the registry.

.NET Core 1.1 will add support for Redis, Azure Blob Storage and Azure Key Vault.

You can develop your own key ring repository by implementing the IXmlRepository interface.
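On the ASP.NET Core side, pointing the cookie middleware at the shared directory comes down to setting DataProtectionProvider on the cookie options. This sketch assumes the shared C:\keyring folder from Barry's sample. SharedKeyRing and Apply are hypothetical names. DataProtectionProvider.Create comes from the Microsoft.AspNetCore.DataProtection.Extensions package installed earlier.

```csharp
using System.IO;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.DataProtection;

// ASP.NET Core 1.0 application. Hypothetical helper, not part of any library.
public static class SharedKeyRing
{
    // Both applications must read and write keys in this same directory
    public static readonly DirectoryInfo KeyRingDirectory = new DirectoryInfo(@"C:\keyring");

    // With no explicit TicketDataFormat, the ASP.NET Core 1.0 cookie
    // middleware builds its ticket format from this provider
    public static void Apply(CookieAuthenticationOptions options)
    {
        options.DataProtectionProvider = DataProtectionProvider.Create(KeyRingDirectory);
    }
}
```

Inside AddIdentity, you could call SharedKeyRing.Apply on the ApplicationCookie options after assigning them. If you use one of the other repositories, only the way the provider is created changes.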

Configure your applications to use the same cookie format

You'll configure each app - ASP.NET 4.5 and ASP.NET Core - to use the AspNetTicketDataFormat for their cookies.
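On the ASP.NET 4.5 side, the interop package supplies AspNetTicketDataFormat. It also supplies DataProtectorShim, which adapts an ASP.NET Core data protector to OWIN's IDataProtector. This sketch assumes the following:

- The app uses the same C:\keyring directory as the ASP.NET Core app.
- DataProtectionProvider.Create is available in the 4.5 project. It lives in Microsoft.AspNetCore.DataProtection.Extensions, which you may need to reference explicitly.

The purpose strings match the ones the ASP.NET Core 1.0 cookie middleware uses internally: its type name, the authentication scheme, and "v2". SharedTicketFormat is a hypothetical name.

```csharp
using System.IO;
using Microsoft.AspNetCore.DataProtection;
using Microsoft.Owin.Security.Interop;

// ASP.NET 4.5 (OWIN) application. Hypothetical helper, not part of any library.
public static class SharedTicketFormat
{
    // authenticationScheme must equal AuthenticationScheme in the ASP.NET Core app
    public static AspNetTicketDataFormat Create(string authenticationScheme)
    {
        // Same shared key ring directory as the ASP.NET Core application
        var provider = DataProtectionProvider.Create(new DirectoryInfo(@"C:\keyring"));

        // Mirrors the purposes used by the ASP.NET Core 1.0 cookie middleware
        var protector = provider.CreateProtector(
            "Microsoft.AspNetCore.Authentication.Cookies.CookieAuthenticationMiddleware",
            authenticationScheme,
            "v2");

        // DataProtectorShim adapts the ASP.NET Core protector for OWIN
        return new AspNetTicketDataFormat(new DataProtectorShim(protector));
    }
}
```

The result goes into the TicketDataFormat property of the OWIN CookieAuthenticationOptions, for example TicketDataFormat = SharedTicketFormat.Create("Cookie"). Meanwhile, the ASP.NET Core app keeps its default ticket format and points its DataProtectionProvider at the same key ring.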

According to his repo, this gets us started with cookie sharing for Identity, but there still needs to be clearer guidance on how to share the Identity 3.0 database between the two frameworks.

The interop shim does not enable the sharing of identity databases between applications. ASP.NET 4.5 uses Identity 1.0 or 2.0; ASP.NET Core uses Identity 3.0. If you want to share databases, you must update the ASP.NET Identity 2.0 applications to use the ASP.NET Identity 3.0 schemas. If you are upgrading from Identity 1.0, you should migrate to Identity 2.0 first rather than try to go directly to 3.0.

Sound off in the Issues over on GitHub if you would like to see this sample (or another) expanded to show more Identity DB sharing. It looks to be very promising work.

About Scott

Scott Hanselman is a former professor, former Chief Architect in finance, now speaker, consultant, father, diabetic, and Microsoft employee. He is a failed stand-up comic, a cornrower, and a book author.
